Prerequisites

- Read Ray Novice Village Tasks to learn the basics of the Ray framework.

- Read Develop Universal Panel Based on Ray to learn the basics of Ray panel development.

Create a panel

Use the panel miniapp to build an AI audio device panel on the Ray framework. The panel implements the following features:

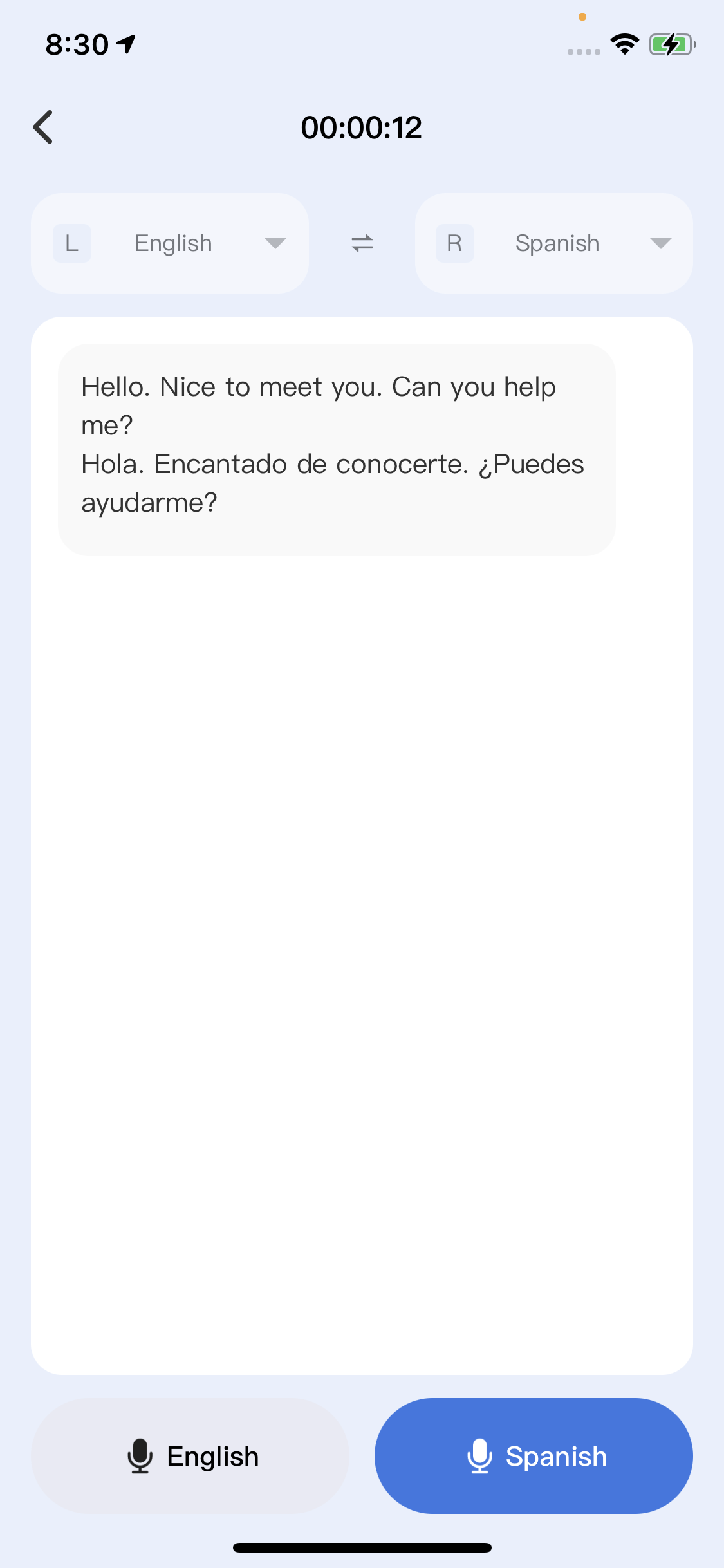

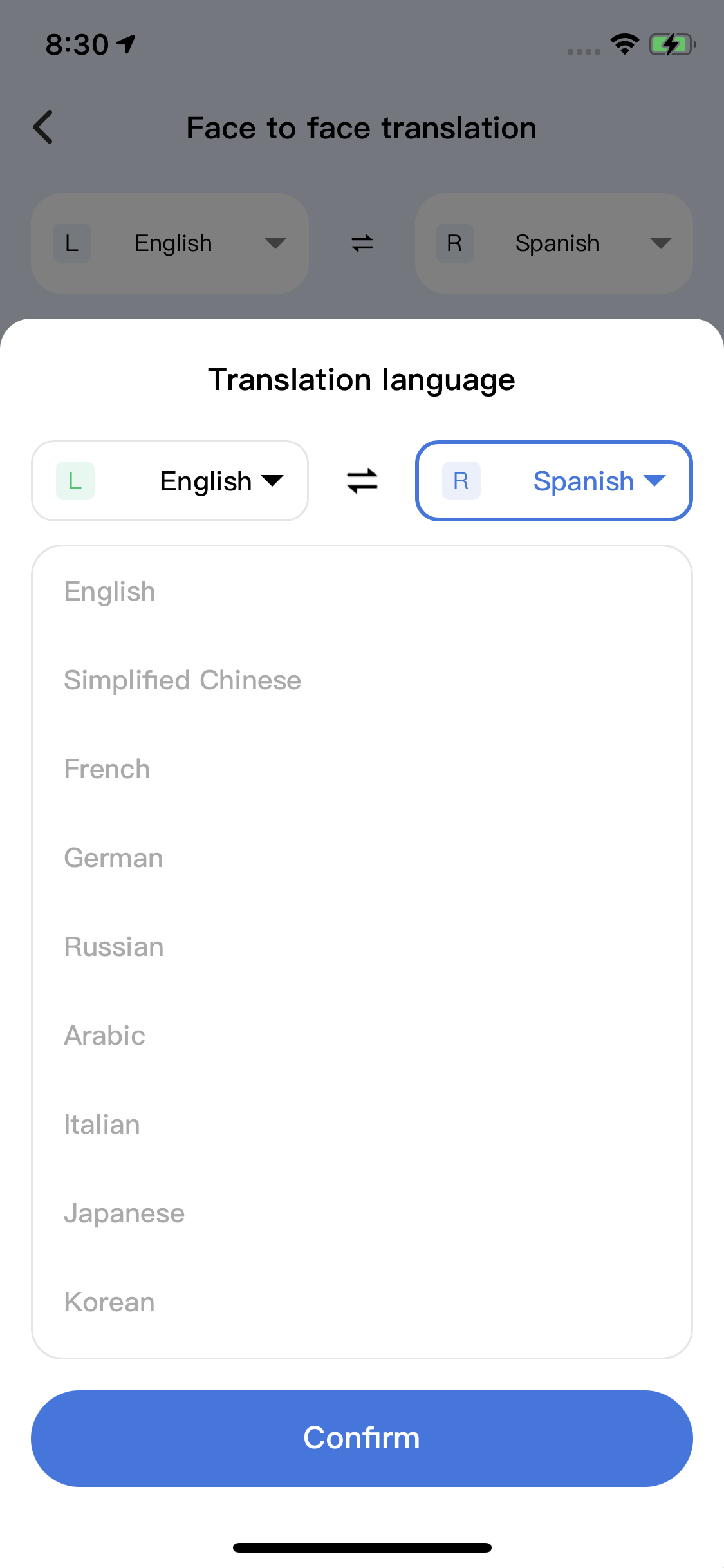

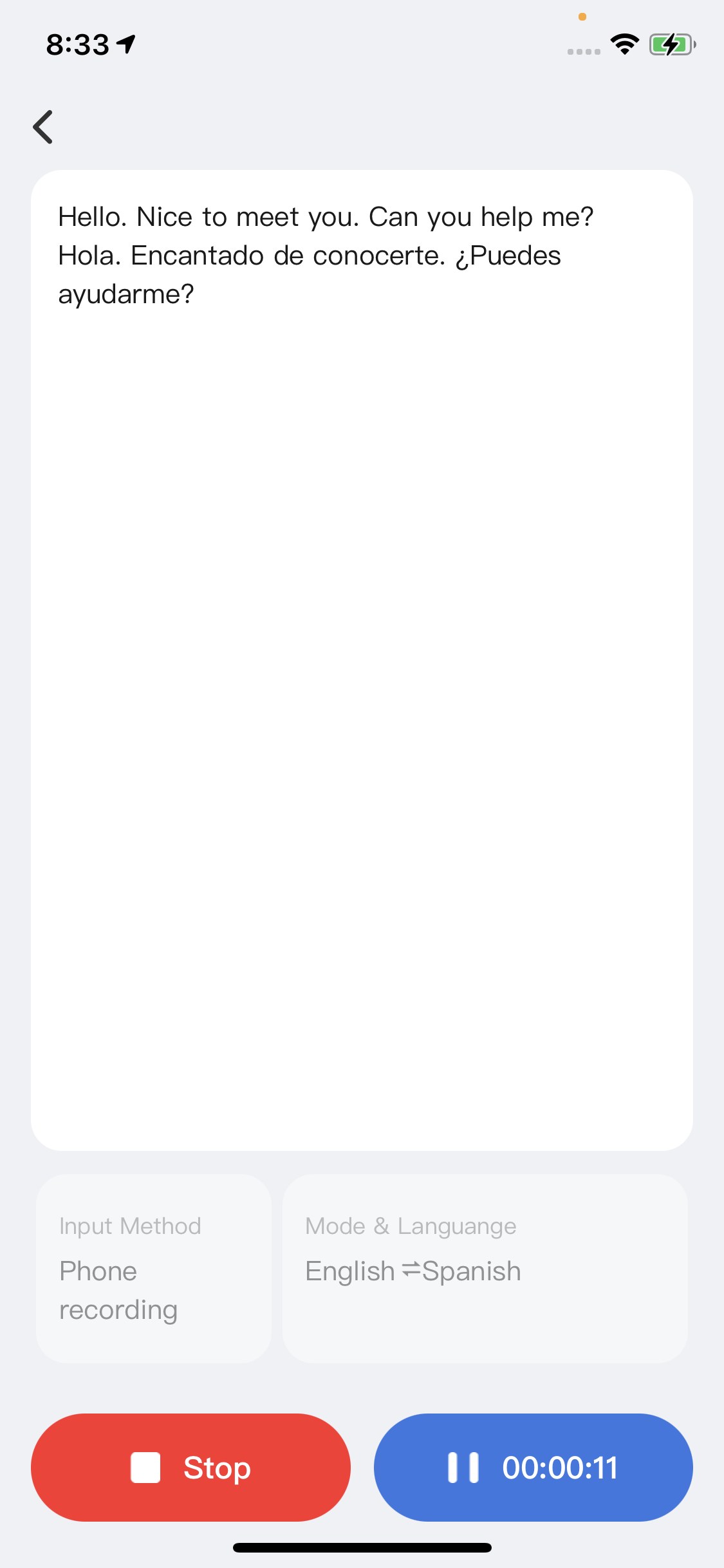

- Face-to-face translation: Select the desired language pair for conversion. During conversations between speakers of different languages, the app will automatically segment sentences, transcribe, and translate them. Then, the device will play the translated results.

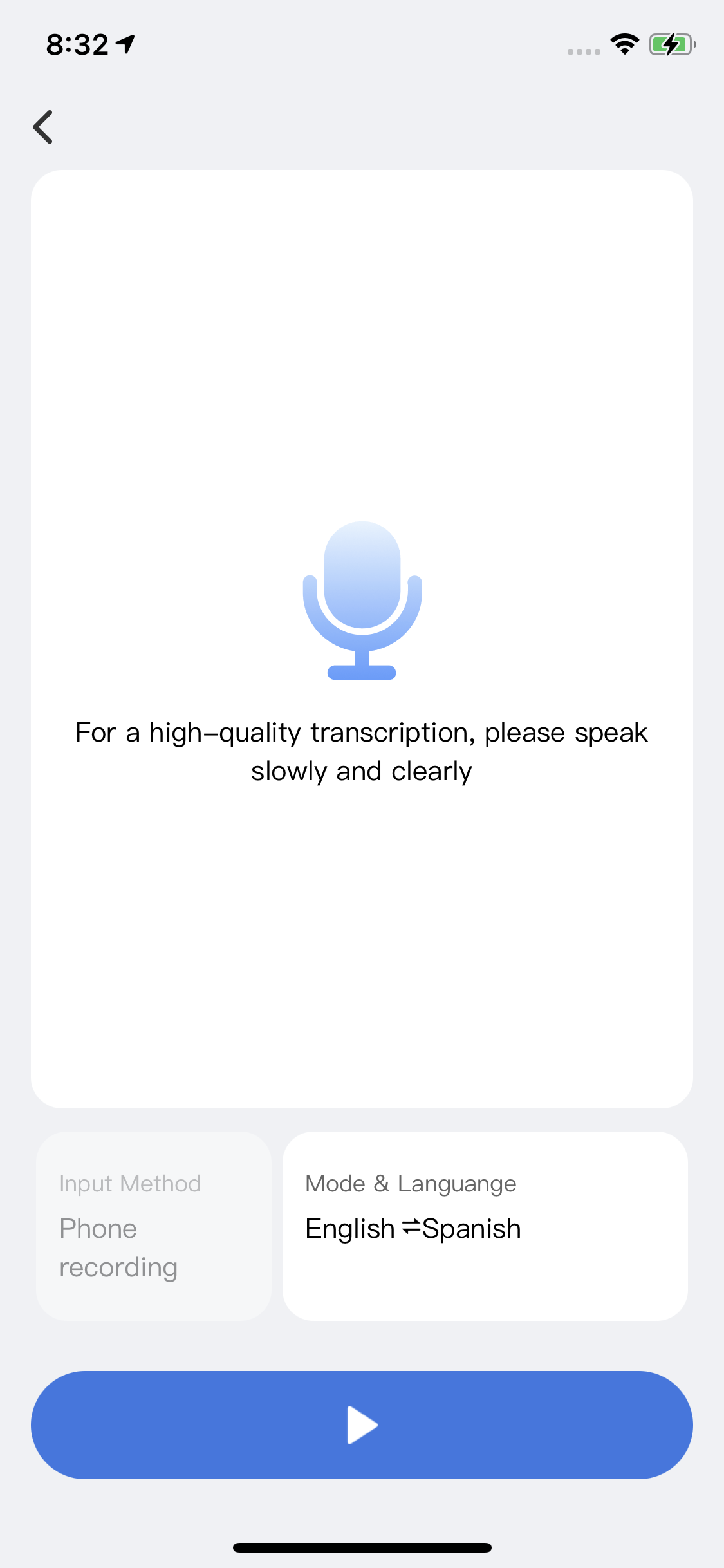

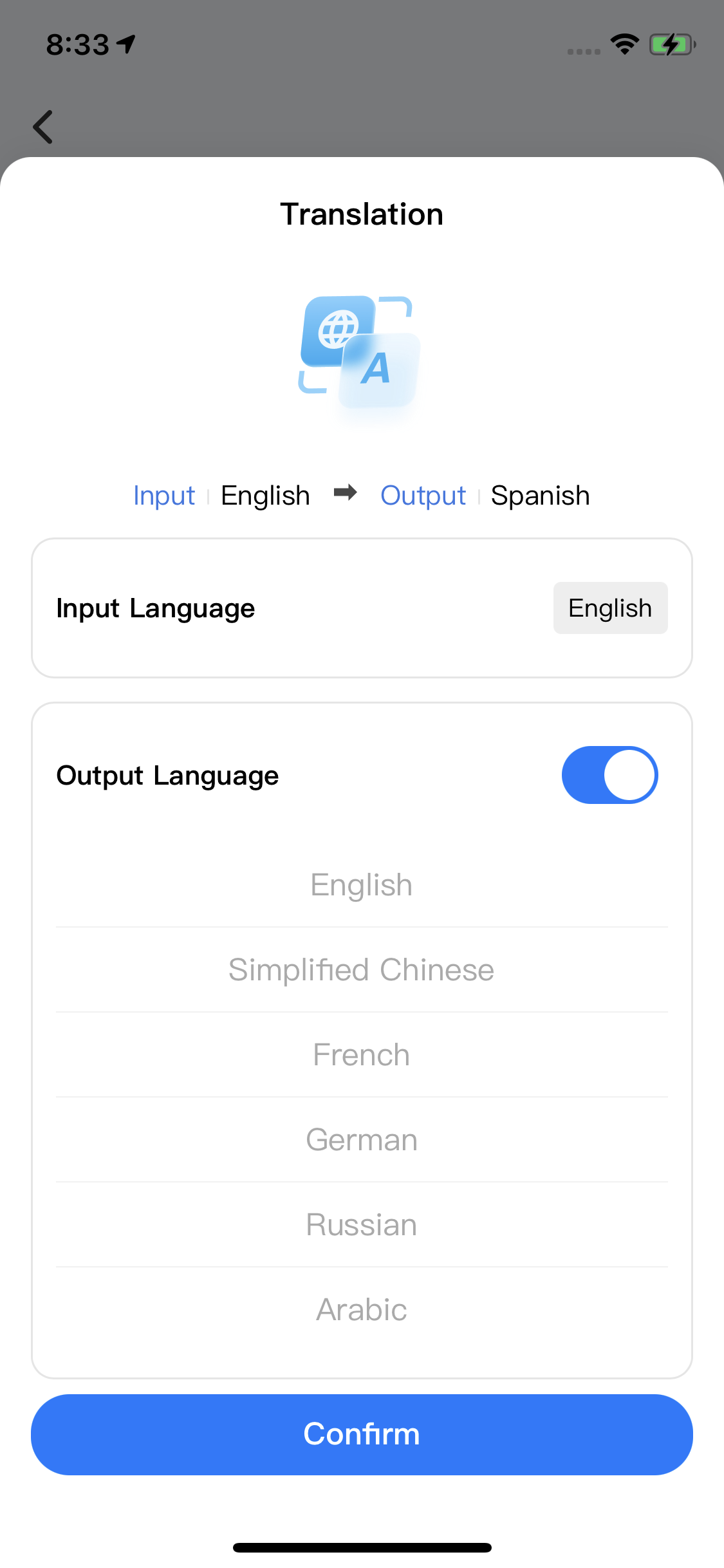

- Simultaneous interpretation: Set the input and target languages. After users tap "Start," the app will automatically segment sentences, transcribe the text, and perform real-time translation.

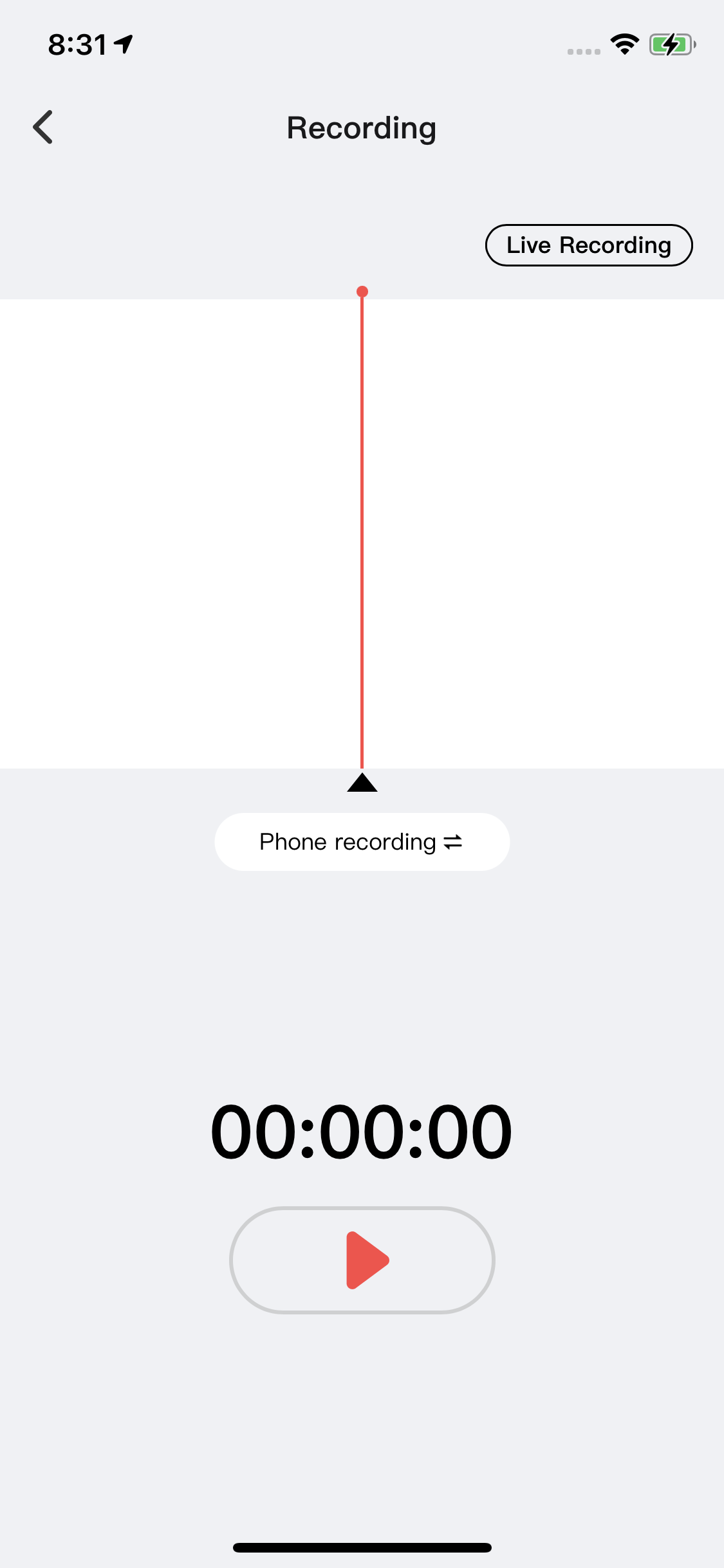

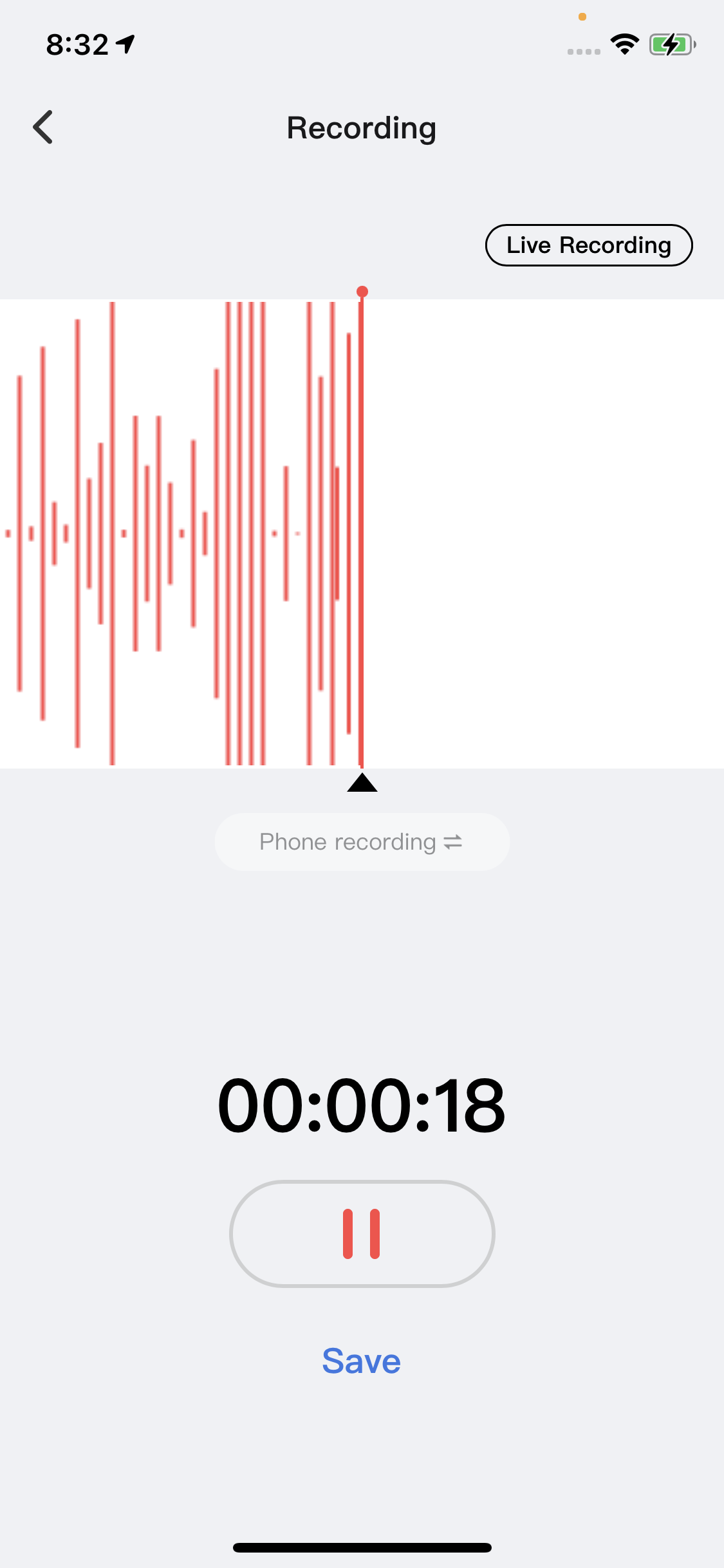

- Live recording: Place audio-capturing devices (such as headphones) on the table and tap "Start Recording" to capture live audio. After recording is completed, the audio can be transcribed and summarized by AI.

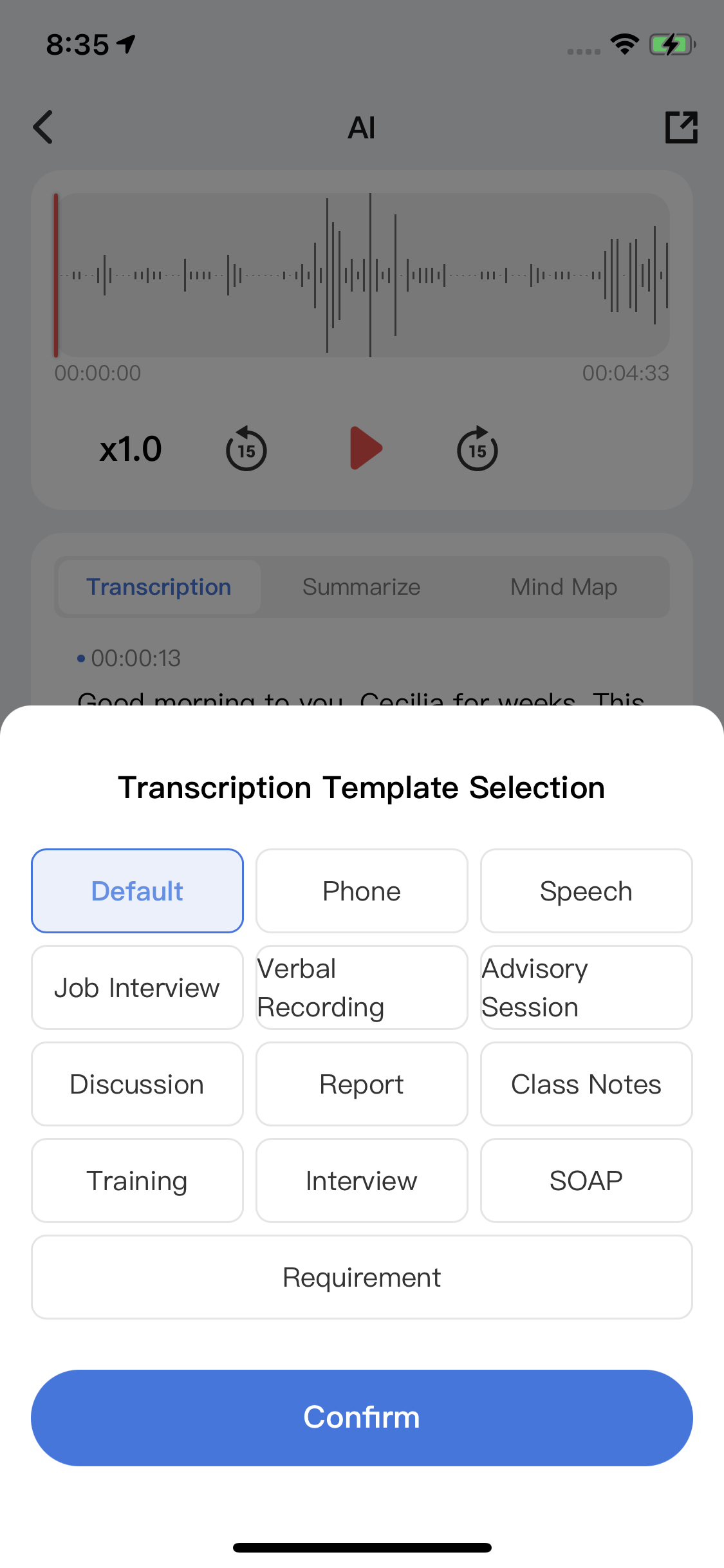

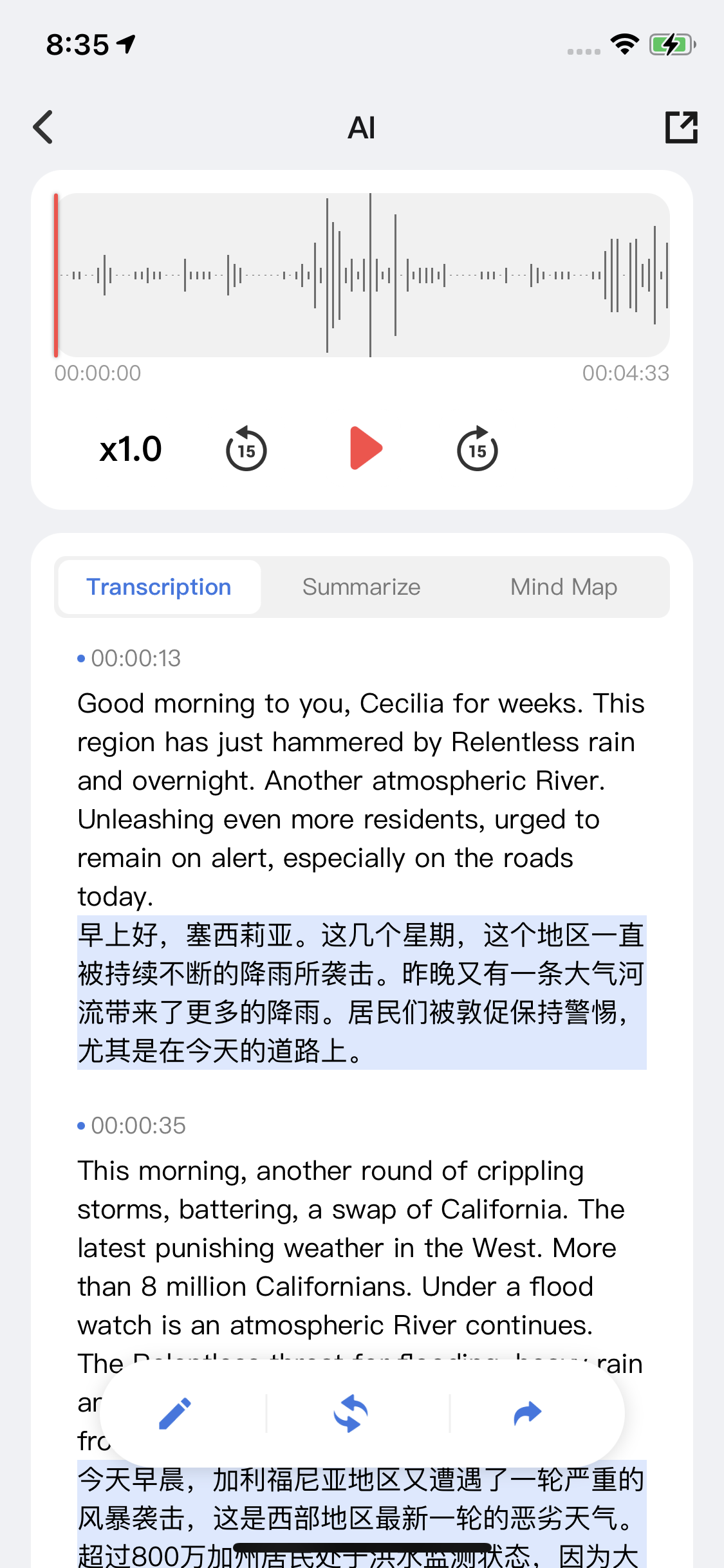

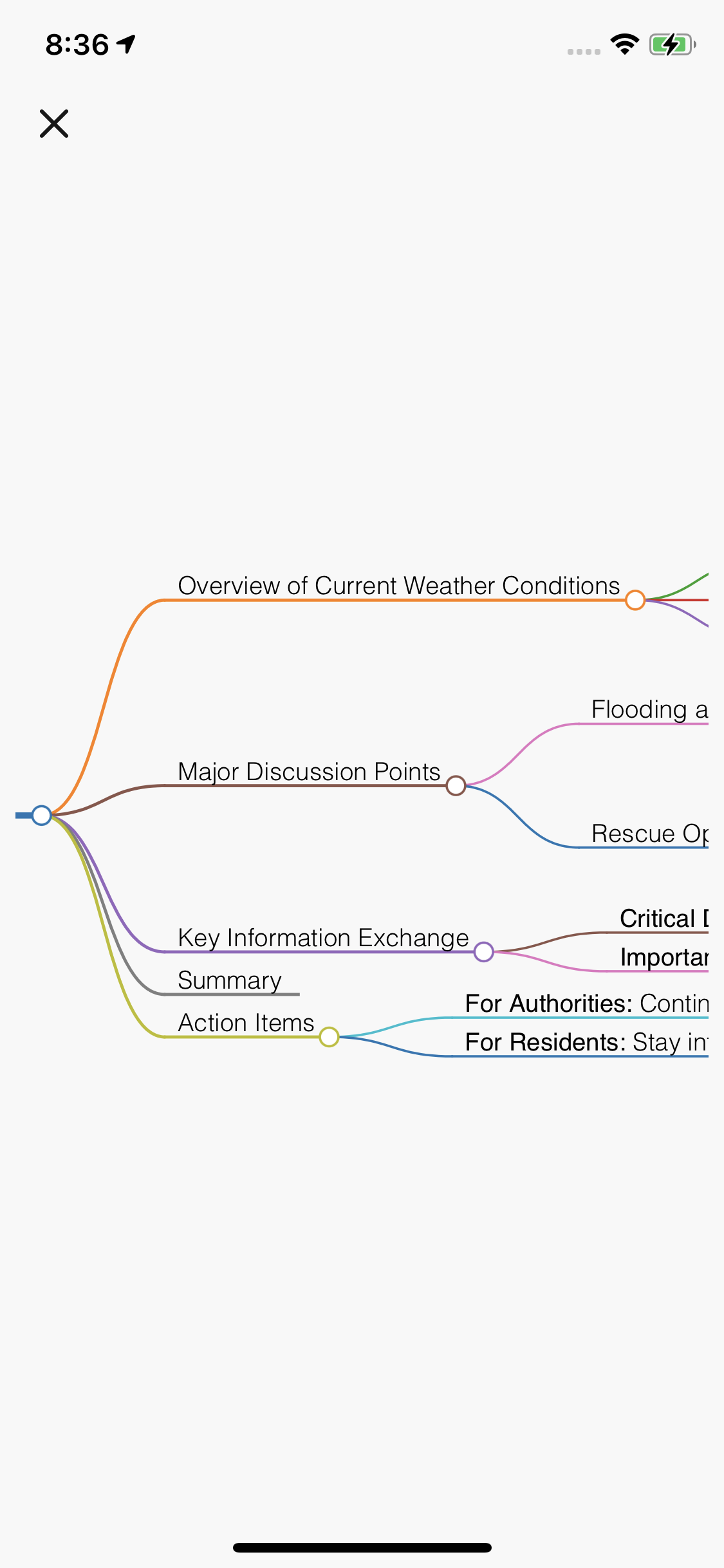

- Transcription and summary: Convert audio content into text, summarize it using AI, and generate Markdown-formatted text.

Development environment

- Smart Life app

- Tuya MiniApp IDE

- NVM and Node development environment (v18.x is recommended)

- Yarn dependency management tool

For more information, see Panel MiniApp > Set up environment.

A product defines the data points (DPs) of the associated panel and device. Before you develop a panel, you must create a product, define the required DPs, and then implement these DPs on the panel.

Register and log in to the Tuya Developer Platform, and then create a product as follows:

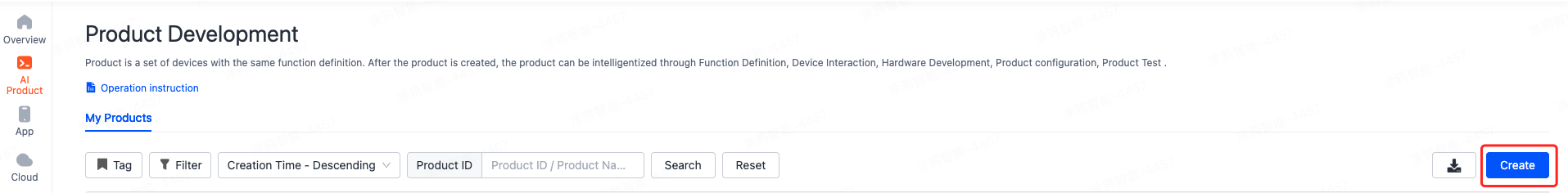

- In the left-side navigation pane, choose Product > Development > Create.

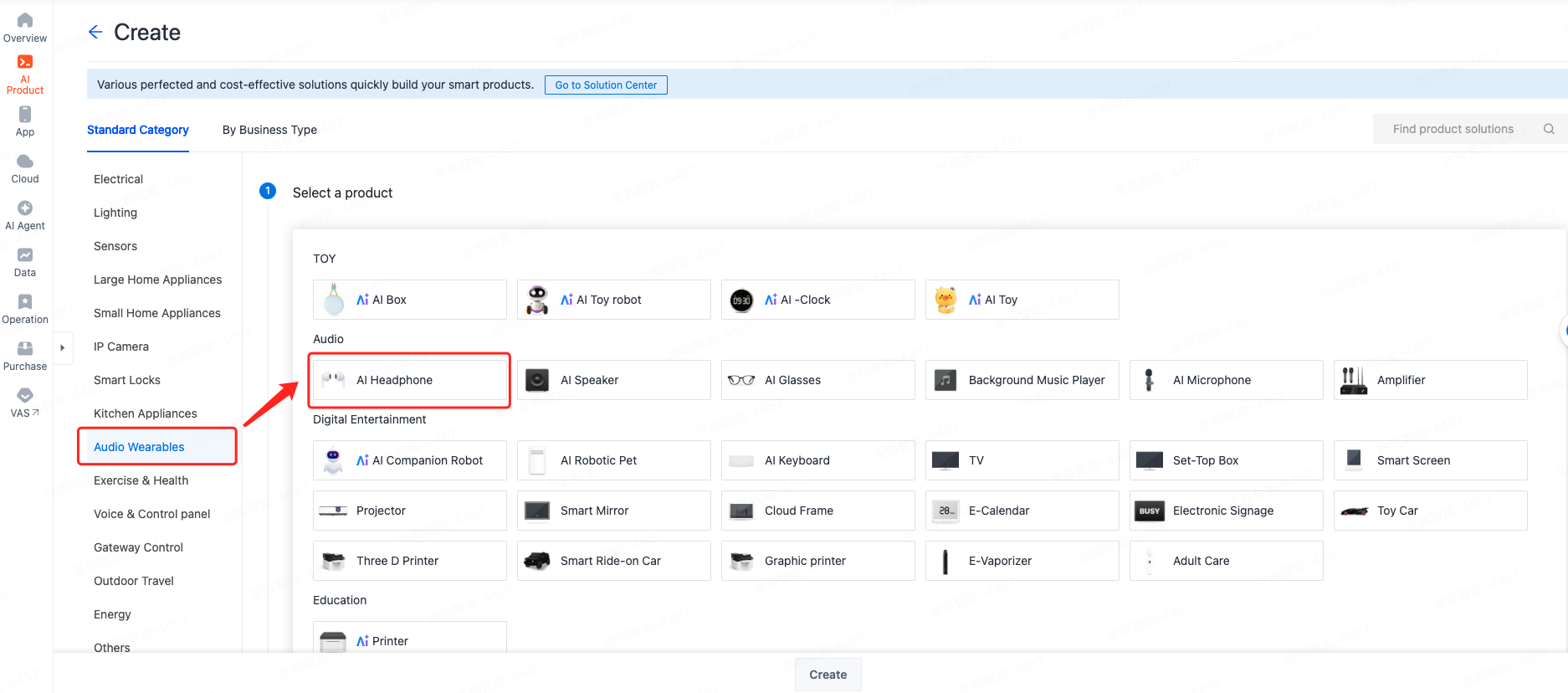

- Click the Standard Category tab and choose Audio Wearables > AI Headphone.

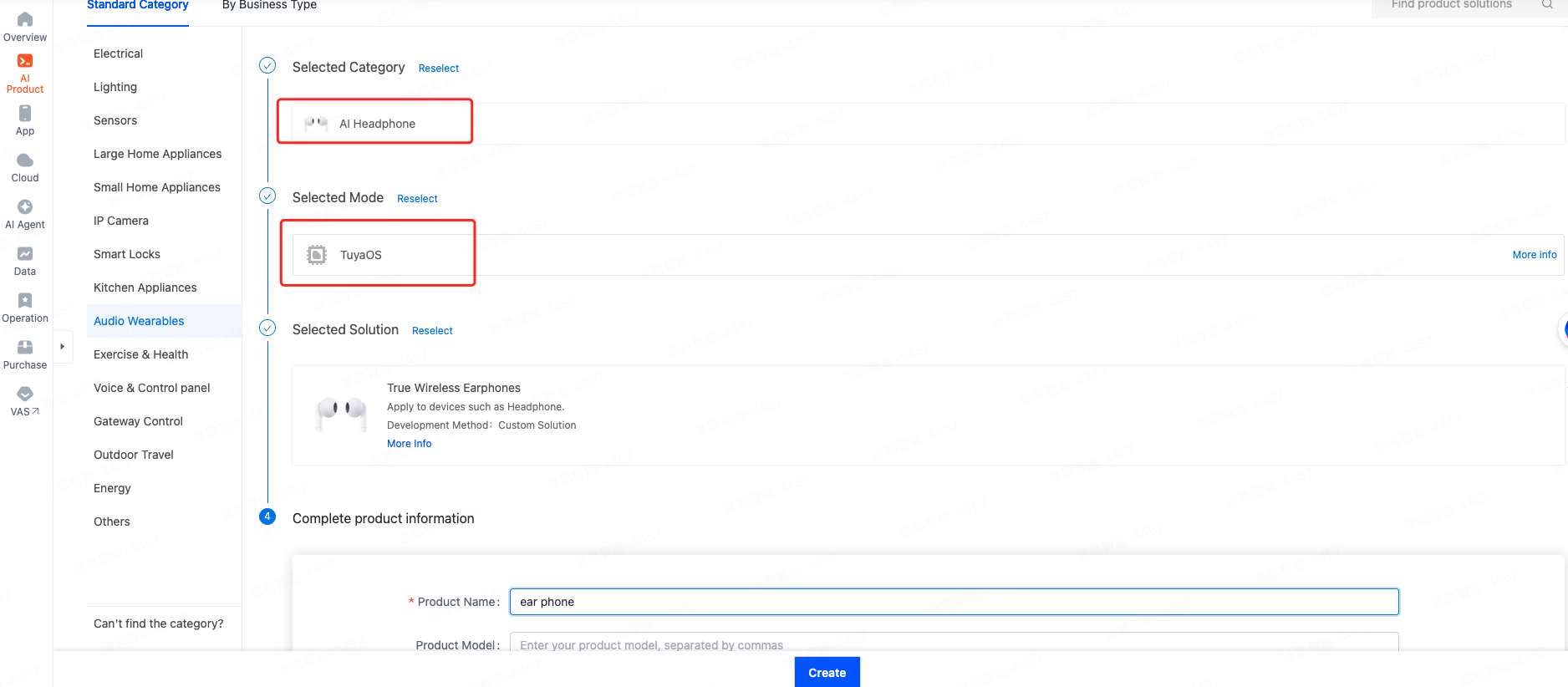

- Follow the prompts to select the smart mode and solution, complete the product information, and then click Create.

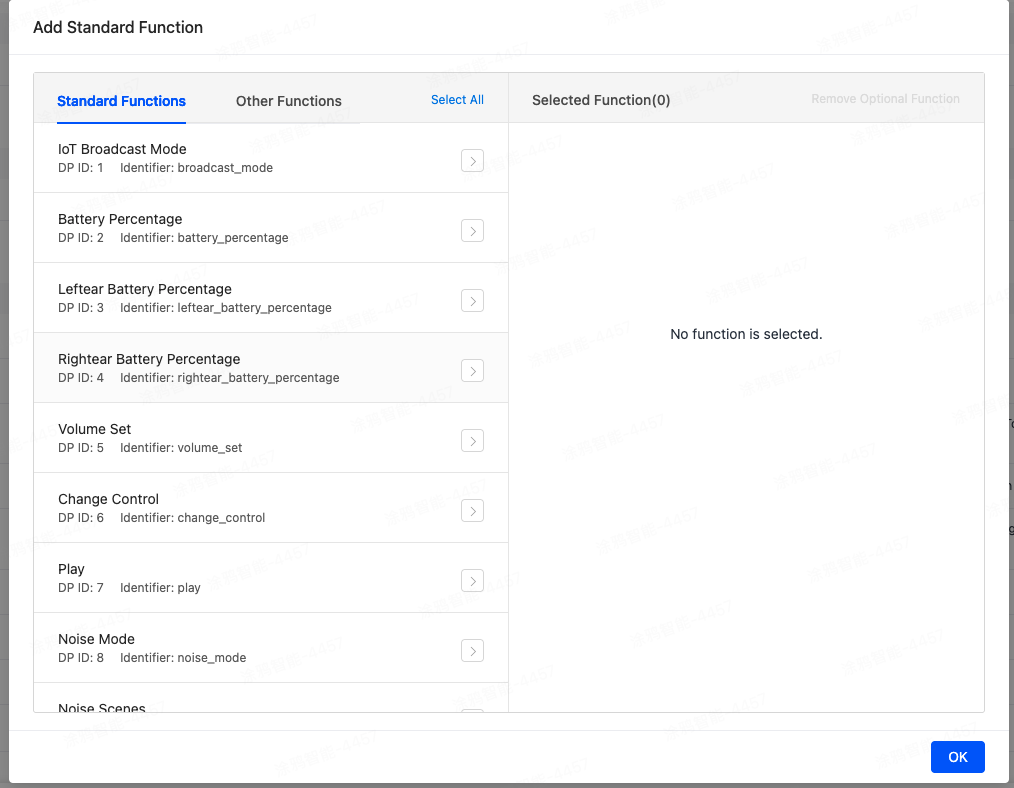

- On the Add Standard Function page, select the DPs you need and click OK.

Create panel miniapp on Smart MiniApp Developer Platform

Register and log in to the Smart MiniApp Developer Platform. For more information, see Create panel miniapp.

Create a project based on a template

Open Tuya MiniApp IDE and create a panel miniapp project based on the AI headphone template.

For more information, see Initialize project.

- App version

  - Smart Life app v6.3.0 and later

- Kit dependency

  - BaseKit: v3.0.6

  - MiniKit: v3.0.1

  - DeviceKit: v4.14.0

  - BizKit: v4.2.0

  - WearKit: v1.1.6

  - Baseversion: v2.27.0

- Dependent components

  - @ray-js/ray^1.7.14
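The minimum versions listed above can be checked in code before a feature is enabled. The following is a minimal sketch rather than part of the template: `parseVersion`, `compareVersions`, and `meetsMinVersion` are hypothetical helpers, and how the panel obtains the running app version is left out because this guide does not specify it.

```typescript
// Hypothetical helpers; not part of the Tuya SDK or the AI headphone template.

/** Parse "v6.3.0" or "6.3.0" into numeric parts, for example [6, 3, 0]. */
function parseVersion(version: string): number[] {
  return version
    .trim()
    .replace(/^v/i, '')
    .split('.')
    .map(part => {
      const n = Number.parseInt(part, 10);
      return Number.isNaN(n) ? 0 : n;
    });
}

/** Return a negative number, 0, or a positive number, like a sort comparator. */
function compareVersions(a: string, b: string): number {
  const pa = parseVersion(a);
  const pb = parseVersion(b);
  const len = Math.max(pa.length, pb.length);
  for (let i = 0; i < len; i += 1) {
    // Missing parts count as 0, so "6.3" equals "6.3.0".
    const diff = (pa[i] ?? 0) - (pb[i] ?? 0);
    if (diff !== 0) return diff;
  }
  return 0;
}

function meetsMinVersion(current: string, minimum: string): boolean {
  return compareVersions(current, minimum) >= 0;
}

// Minimum Smart Life app version listed above.
const MIN_APP_VERSION = '6.3.0';
console.log(meetsMinVersion('6.10.1', MIN_APP_VERSION)); // true
console.log(meetsMinVersion('6.2.9', MIN_APP_VERSION)); // false
```

Comparing the parts numerically avoids the string-comparison pitfall where "6.10.1" would sort before "6.3.0".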

Face-to-face translation

- Enable real-time cross-language communication, breaking language barriers for smooth multilingual user interactions.

- Automatically segment sentences, transcribe, and translate to improve communication efficiency.

- Devices can audibly output translations for natural conversational experiences.

- Support multi-language bidirectional conversion, adaptable to diverse scenarios, enhancing product intelligence and global compatibility.
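Bidirectional conversion means both speakers share one language pair, and the direction flips depending on who is talking. The sketch below isolates that rule. The field names `originalLanguage`, `targetLanguage`, and `f2fChannel` follow the recording configuration used in this guide, while `buildF2fLanguageConfig` and the language codes `'en'` and `'zh'` are illustrative assumptions.

```typescript
// Sketch only: buildF2fLanguageConfig is a hypothetical helper.

type Side = 'left' | 'right';

interface F2fLanguageConfig {
  originalLanguage: string; // input (spoken) language
  targetLanguage: string; // output (translated) language
  f2fChannel: 0 | 1; // 0: left ear, 1: right ear
}

function buildF2fLanguageConfig(
  side: Side,
  leftLanguage: string,
  rightLanguage: string
): F2fLanguageConfig {
  const isLeft = side === 'left';
  return {
    // The speaking side supplies the input language; the other side receives the output.
    originalLanguage: isLeft ? leftLanguage : rightLanguage,
    targetLanguage: isLeft ? rightLanguage : leftLanguage,
    f2fChannel: isLeft ? 0 : 1,
  };
}

// 'en' and 'zh' are placeholder codes, not values confirmed by the SDK.
console.log(buildF2fLanguageConfig('left', 'en', 'zh'));
console.log(buildF2fLanguageConfig('right', 'en', 'zh'));
```

Keeping the swap in one pure function makes it easy to verify that the two directions are exact mirrors of each other.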

Demonstration

Code snippet

// Start recording

const handleStartRecord = useCallback(

(type: 'left' | 'right') => {

const startRecordFn = async (type: 'left' | 'right') => {

// Do nothing if the device is offline

if (!isOnline) return;

try {

showLoading({ title: '' });

const config: any = {

// The recording type. 0: call, 1: conference, 2: simultaneous interpretation, 3: face-to-face translation

recordType: 3,

// DP control timeout, in seconds

controlTimeout: 5,

// Streaming timeout, in seconds

dataTimeout: 10,

// 0: file transcription, 1: real-time transcription

transferType: 1,

// Indicate whether translation is required

needTranslate: true,

// Input language

originalLanguage: type === 'left' ? leftLanguage : rightLanguage,

// Output language

targetLanguage: type === 'left' ? rightLanguage : leftLanguage,

// The agent ID. Obtain agentId through the provided SDK

agentId: '',

// The recording channel. 0: Bluetooth low energy, 1: Bluetooth, 2: microphone

recordChannel: isCardStyle || isDevOnline === false ? 2 : 1,

// 0: left ear, 1: right ear

f2fChannel: type === 'left' ? 0 : 1,

// TTS stream encoding method. Write the stream to the headphone device after encoding. 0: opus_silk, 1: opus_celt

ttsEncode: isOpusCelt ? 1 : 0,

// Indicate whether TTS is required

needTts: true,

};

await tttStartRecord(

{

deviceId,

config,

},

true

);

setActiveType(type);

hideLoading();

setIntervals(1000);

lastTimeRef.current = Date.now();

} catch (error) {

ty.showToast({

title: Strings.getLang('error_simultaneous_recording_start'),

icon: 'error',

});

hideLoading();

}

};

ty.authorize({

scope: 'scope.record',

success: () => {

startRecordFn(type);

},

fail: e => {

ty.showToast({ title: Strings.getLang('no_record_permisson'), icon: 'error' });

},

});

},

[deviceId, isOnline, rightLanguage, leftLanguage, isCardStyle, isDevOnline, isOpusCelt]

);

// Pause

const handlePauseRecord = async () => {

try {

const d = await tttPauseRecord(deviceId);

setActiveType('');

console.log('pauseRecord', d);

setIntervals(undefined);

} catch (error) {

console.log('handlePauseRecord fail', error);

}

};

// Resume recording

const handleResumeRecord = async () => {

if (!isOnline) return;

try {

const d = await tttResumeRecord(deviceId);

setIntervals(1000);

lastTimeRef.current = Date.now();

} catch (error) {

console.log('handleResumeRecord fail', error);

}

};

// Stop recording

const handleStopRecord = async () => {

try {

showLoading({ title: '' });

const d = await tttStopRecord(deviceId);

hideLoading();

console.log('stopRecord', d);

backToHome(fromType);

} catch (error) {

hideLoading();

}

};

// Listen for ASR and translation results

onRecordTransferRealTimeRecognizeStatusUpdateEvent(handleRecrodChange);

// Handle ASR and translation

const handleRecrodChange = d => {

try {

const {

// The phase. 0: task, 4: ASR, 5: translation, 6: skill, 7: TTS

phase,

// The status of a specified stage. 0: not started, 1: in progress, 2: completed, 3: canceled

status,

requestId,

// The transcribed text

text,

// The error code

errorCode,

} = d;

// Receive and update requestId text in real time during the ASR phase

if (phase === 4) {

const currTextItemIdx = currTextListRef.current.findIndex(item => item.id === requestId);

if (currTextItemIdx > -1) {

const newList = currTextListRef.current.map(item =>

item.id === requestId ? { ...item, text } : item

);

currTextListRef.current = newList;

setTextList(newList);

} else {

if (!text) return;

const newList = [

...currTextListRef.current,

{

id: requestId,

text,

},

];

currTextListRef.current = newList;

setTextList(newList);

}

// During the translation phase, show the result only when status is 2 (translation completed).

} else if (phase === 5 && status === 2) {

let resText = '';

if (text && text !== 'null') {

if (isJsonString(text)) {

const textArr = JSON.parse(text);

const isArr = Array.isArray(textArr);

// A numeric string such as "111" passes isJsonString but parses to a number, so call .join() only when the parsed value is an array.

resText = isArr ? textArr.join('\n') : String(textArr);

} else {

resText = text;

}

}

if (!resText) {

return;

}

const newList = currTextListRef.current.map(item => {

return item.id === requestId ? { ...item, text: `${item.text}\n${resText}` } : item;

});

currTextListRef.current = newList;

setTextList(newList);

}

} catch (error) {

console.warn(error);

}

};
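The handler above mixes list updates with React state. As a rough illustration, the same merge rules can be written as a pure function, which is easier to test in isolation. `applyRecognizeEvent` and `parseTranslation` are hypothetical names, the event shape is reduced to the fields used above, and `JSON.parse` in a `try`/`catch` stands in for the template's `isJsonString` helper, whose implementation is not shown in this guide.

```typescript
// Sketch only: hypothetical helpers that restate the handler logic without React state.

interface TextItem {
  id: string;
  text: string;
}

interface RecognizeEvent {
  phase: number; // 4: ASR, 5: translation
  status: number; // 2: completed
  requestId: string;
  text?: string | null;
}

/** Normalize a translation payload that may be plain text or a JSON-encoded array. */
function parseTranslation(text?: string | null): string {
  if (!text || text === 'null') return '';
  try {
    const parsed: unknown = JSON.parse(text);
    // "111" is valid JSON but parses to a number, so only arrays are joined.
    if (Array.isArray(parsed)) return parsed.join('\n');
    return typeof parsed === 'string' ? parsed : text;
  } catch {
    return text; // Not JSON: use the raw text.
  }
}

function applyRecognizeEvent(list: TextItem[], event: RecognizeEvent): TextItem[] {
  const { phase, status, requestId, text } = event;
  if (phase === 4) {
    const exists = list.some(item => item.id === requestId);
    if (exists) {
      // A later ASR event for the same request replaces the earlier partial text.
      return list.map(item => (item.id === requestId ? { ...item, text: text ?? '' } : item));
    }
    return text ? [...list, { id: requestId, text }] : list;
  }
  if (phase === 5 && status === 2) {
    const translated = parseTranslation(text);
    if (!translated) return list;
    // Append the finished translation below the transcribed sentence.
    return list.map(item =>
      item.id === requestId ? { ...item, text: `${item.text}\n${translated}` } : item
    );
  }
  return list;
}

let transcript: TextItem[] = [];
transcript = applyRecognizeEvent(transcript, { phase: 4, status: 1, requestId: 'r1', text: 'Hel' });
transcript = applyRecognizeEvent(transcript, { phase: 4, status: 2, requestId: 'r1', text: 'Hello' });
transcript = applyRecognizeEvent(transcript, { phase: 5, status: 2, requestId: 'r1', text: '["Bonjour"]' });
console.log(JSON.stringify(transcript)); // [{"id":"r1","text":"Hello\nBonjour"}]
```

In a component, the returned list could be written to both the ref and the state, as the handler above does with `currTextListRef` and `setTextList`.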

Simultaneous interpretation

- Real-time translation delivers near-simultaneous conversation, significantly enhancing communication efficiency.

- The system supports multilingual bidirectional interpretation, making it ideal for international conferences, business negotiations, and diverse scenarios.

- Automated sentence segmentation, transcription, and translation minimize manual intervention while improving accuracy.

- Devices can audibly output translations for natural conversational experiences.

Demonstration

Code snippet

// Complete the recording configuration and start recording

const startRcordFn = async () => {

// Do nothing if the device is offline

if (!isOnline) return;

// Show a prompt when translation is enabled but no target language is selected

if (needTranslate && !translationLanguage) {

showToast({

icon: 'none',

title: Strings.getLang('realtime_recording_translation_no_select_tip'),

});

return;

}

try {

setControlBtnLoading(true);

const config: any = {

// The recording type. 0: call, 1: conference

recordType: currRecordType,

// DP control timeout, in seconds

controlTimeout: 5,

// Streaming timeout, in seconds

dataTimeout: 10,

// 0: file transcription, 1: real-time transcription

transferType: 1,

// Indicate whether translation is required

needTranslate,

// Input language

originalLanguage: originLanguage,

// The agent ID. Obtain agentId through the provided SDK

agentId: '',

// The recording channel. 0: Bluetooth low energy, 1: Bluetooth, 2: microphone

recordChannel,

// TTS stream encoding method. Write the stream to the headphone device after encoding. 0: opus_silk, 1: opus_celt

ttsEncode: isOpusCelt ? 1 : 0,

};

if (needTranslate) {

// The target language

config.targetLanguage = translationLanguage;

}

await tttStartRecord({

deviceId,

config,

});

setInterval(1000);

lastTimeRef.current = Date.now();

setControlBtnLoading(false);

} catch (error) {

setControlBtnLoading(false);

}

};

// The callback invoked when the Start Recording button is tapped

const handleStartRecord = useCallback(async () => {

// Request recording permission

if (isBtEntryVersion) {

ty.authorize({

scope: 'scope.record',

success: () => {

startRcordFn();

},

fail: e => {

ty.showToast({ title: Strings.getLang('no_record_permisson'), icon: 'error' });

console.log('scope.record: ', e);

},

});

return;

}

startRcordFn();

}, [

deviceId,

isOnline,

controlBtnLoading,

currRecordType,

needTranslate,

originLanguage,

translationLanguage,

recordChannel,

isBtEntryVersion,

offlineUsage,

]);

// Pause

const handlePauseRecord = async () => {

if (controlBtnLoading) return;

try {

setControlBtnLoading(true);

const d = await tttPauseRecord(deviceId);

setInterval(undefined);

setControlBtnLoading(false);

} catch (error) {

console.log('fail', error);

setControlBtnLoading(false);

}

};

// Resume recording

const handleResumeRecord = async () => {

if (controlBtnLoading) return;

try {

setControlBtnLoading(true);

await tttResumeRecord(deviceId);

setInterval(1000);

lastTimeRef.current = Date.now();

setControlBtnLoading(false);

} catch (error) {

setControlBtnLoading(false);

}

};

// Stop

const handleStopRecord = async () => {

if (controlBtnLoading) return;

try {

ty.showLoading({ title: '' });

await tttStopRecord(deviceId);

setDuration(0);

setInterval(undefined);

ty.hideLoading({

complete: () => {

backToHome(fromType);

},

});

} catch (error) {

ty.hideLoading();

}

};

// Listen for ASR and translation results

onRecordTransferRealTimeRecognizeStatusUpdateEvent(handleRecrodChange);

// Handle ASR and translation

const handleRecrodChange = d => {

try {

const {

// The phase. 0: task, 4: ASR, 5: translation, 6: skill, 7: TTS

phase,

// The status of a specified stage. 0: not started, 1: in progress, 2: completed, 3: canceled

status,

requestId,

// The transcribed text

text,

// The error code

errorCode,

} = d;

// Receive and update requestId text in real time during the ASR phase

if (phase === 4) {

const currTextItemIdx = currTextListRef.current.findIndex(item => item.id === requestId);

if (currTextItemIdx > -1) {

const newList = currTextListRef.current.map(item =>

item.id === requestId ? { ...item, text } : item

);

currTextListRef.current = newList;

setTextList(newList);

} else {

if (!text) return;

const newList = [

...currTextListRef.current,

{

id: requestId,

text,

},

];

currTextListRef.current = newList;

setTextList(newList);

}

// During the translation phase, show the result only when status is 2 (translation completed).

} else if (phase === 5 && status === 2) {

let resText = '';

if (text && text !== 'null') {

if (isJsonString(text)) {

const textArr = JSON.parse(text);

const isArr = Array.isArray(textArr);

// A numeric string such as "111" passes isJsonString but parses to a number, so call .join() only when the parsed value is an array.

resText = isArr ? textArr.join('\n') : String(textArr);

} else {

resText = text;

}

}

if (!resText) {

return;

}

const newList = currTextListRef.current.map(item => {

return item.id === requestId ? { ...item, text: `${item.text}\n${resText}` } : item;

});

currTextListRef.current = newList;

setTextList(newList);

}

} catch (error) {

console.warn(error);

}

};
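The start function above validates the language selection and adds `targetLanguage` only when translation is enabled. A minimal sketch of that rule as a pure function follows. `buildInterpretationConfig` and the result type are hypothetical, only the language-related fields of the config are modeled, and the language codes are placeholders.

```typescript
// Sketch only: buildInterpretationConfig is a hypothetical helper.

interface InterpretationOptions {
  needTranslate: boolean;
  originalLanguage: string;
  translationLanguage?: string;
}

interface InterpretationConfig {
  transferType: 1; // 1: real-time transcription
  needTranslate: boolean;
  originalLanguage: string;
  targetLanguage?: string;
}

type BuildResult =
  | { ok: true; config: InterpretationConfig }
  | { ok: false; reason: 'missing-target-language' };

function buildInterpretationConfig(options: InterpretationOptions): BuildResult {
  const { needTranslate, originalLanguage, translationLanguage } = options;
  // Translation is enabled but no target language is selected: the caller should show a prompt.
  if (needTranslate && !translationLanguage) {
    return { ok: false, reason: 'missing-target-language' };
  }
  const config: InterpretationConfig = { transferType: 1, needTranslate, originalLanguage };
  if (needTranslate && translationLanguage) {
    config.targetLanguage = translationLanguage;
  }
  return { ok: true, config };
}

// 'en' and 'fr' are placeholder codes, not values confirmed by the SDK.
console.log(buildInterpretationConfig({ needTranslate: true, originalLanguage: 'en' }));
console.log(
  buildInterpretationConfig({ needTranslate: true, originalLanguage: 'en', translationLanguage: 'fr' })
);
```

Returning a tagged result instead of showing a toast directly keeps the validation separate from the UI.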

Live recording

- Automatically record all ambient sounds to fully reproduce on-site communications, facilitating subsequent verification and review.

- Support audio transcription and AI-powered summarization to quickly extract key information and enhance data processing efficiency.

- Reduce manual documentation costs, prevent omission of critical details, and improve work and communication accuracy.

- Apply to meetings, lectures, interviews, and more, boosting product utility and intelligent performance.

Demonstration

Code snippet

// Complete the recording configuration and invoke app capabilities to start recording

const startRecordFn = async () => {

try {

setControlBtnLoading(true);

await tttStartRecord(

{

deviceId,

config: {

// Specify whether to keep the audio file when an error occurs

saveDataWhenError: true,

// The recording type. 0: call, 1: conference

recordType: currRecordType,

// DP control timeout, in seconds

controlTimeout: 5,

// Streaming timeout, in seconds

dataTimeout: 10,

// 0: file transcription, 1: real-time transcription

transferType: 0,

// The recording channel. 0: Bluetooth low energy, 1: Bluetooth, 2: microphone

recordChannel,

// TTS stream encoding method. Write the stream to the headphone device after encoding. 0: opus_silk, 1: opus_celt

ttsEncode: isOpusCelt ? 1 : 0,

},

},

);

setInterval(1000);

lastTimeRef.current = Date.now();

setControlBtnLoading(false);

} catch (error) {

setControlBtnLoading(false);

}

};

// The callback invoked when the Start Recording button is tapped

const handleStartRecord = useCallback(async () => {

// Request permission

if (isBtEntryVersion) {

ty.authorize({

scope: 'scope.record',

success: () => {

startRecordFn();

},

fail: e => {

ty.showToast({ title: Strings.getLang('no_record_permisson'), icon: 'error' });

},

});

return;

}

startRecordFn();

}, [deviceId, currRecordType, recordChannel, isBtEntryVersion, isOpusCelt]);

// Pause

const handlePauseRecord = async () => {

try {

setControlBtnLoading(true);

await tttPauseRecord(deviceId);

setInterval(undefined);

setControlBtnLoading(false);

} catch (error) {

console.log('fail', error);

setControlBtnLoading(false);

}

};

// Resume recording

const handleResumeRecord = async () => {

try {

setControlBtnLoading(true);

await tttResumeRecord(deviceId);

setInterval(1000);

lastTimeRef.current = Date.now();

setControlBtnLoading(false);

} catch (error) {

setControlBtnLoading(false);

}

};

// Stop

const handleStopRecord = async () => {

try {

ty.showLoading({ title: '' });

await tttStopRecord(deviceId);

setDuration(0);

setInterval(undefined);

ty.hideLoading();

backToHome();

} catch (error) {

ty.hideLoading();

}

};
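The handlers above start a one-second interval and store `Date.now()` in `lastTimeRef` on start and resume, which suggests the recording duration is accumulated from timestamps rather than tick counts. That is an assumption, since the tick callback is not shown in this guide. The sketch below illustrates the idea with a hypothetical `RecordingClock` class and an injectable clock so that it can be tested without waiting.

```typescript
// Sketch only: RecordingClock is a hypothetical helper, not part of the template.

class RecordingClock {
  private elapsedMs = 0;
  private lastTime: number | null = null; // null while paused or stopped
  private readonly now: () => number;

  constructor(now: () => number = Date.now) {
    this.now = now;
  }

  start(): void {
    this.elapsedMs = 0;
    this.lastTime = this.now();
  }

  /** Add the time since the last tick; call this from the one-second interval. */
  tick(): number {
    if (this.lastTime !== null) {
      const current = this.now();
      this.elapsedMs += current - this.lastTime;
      this.lastTime = current;
    }
    return this.elapsedMs;
  }

  pause(): void {
    this.tick();
    this.lastTime = null;
  }

  resume(): void {
    if (this.lastTime === null) this.lastTime = this.now();
  }

  /** Return the total recorded time and reset the clock. */
  stop(): number {
    this.tick();
    this.lastTime = null;
    const total = this.elapsedMs;
    this.elapsedMs = 0;
    return total;
  }
}

let fakeNow = 0;
const clock = new RecordingClock(() => fakeNow);
clock.start();
fakeNow = 3000;
clock.pause(); // 3 seconds recorded
fakeNow = 10000; // time spent paused is not counted
clock.resume();
fakeNow = 12000;
console.log(clock.stop()); // 5000
```

Measuring against timestamps keeps the displayed duration accurate even if an interval tick is delayed.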

Transcription and summary

- Transcribe audio content into editable text for easy archival and further processing.

- Leverage AI technology to summarize transcribed content based on selected templates, rapidly extracting key information to enhance data retrieval efficiency.

- Reduce manual organization and reading costs while preventing omission of critical content.

- Generate structured Markdown-formatted text for streamlined document archiving and sharing.

- Apply to multiple scenarios, including meeting minutes, call transcripts, face-to-face conversations, and lecture notes, making your product more practical and smart.

Demonstration

Code snippet

{/* Transcription results */}

<View className={styles.content}>

<Tabs.SegmentedPicker

activeKey={currTab}

tabActiveTextStyle={{

color: 'rgba(54, 120, 227, 1)',

fontWeight: '600',

}}

style={{ backgroundColor: 'rgba(241, 241, 241, 1)' }}

onChange={activeKey => {

setCurrTab(activeKey);

}}

>

<Tabs.TabPanel tab={Strings.getLang('recording_detail_tab_stt')} tabKey="stt" />

<Tabs.TabPanel tab={Strings.getLang('recording_detail_tab_summary')} tabKey="summary" />

<Tabs.TabPanel

tab={Strings.getLang('recording_detail_tab_mind_map')}

tabKey="mindMap"

/>

</Tabs.SegmentedPicker>

{currTab === 'stt' && (

<SttContent

playerStatus={playerStatus}

wavFilePath={recordFile?.wavFilePath}

transferStatus={transferStatus}

sttData={sttData}

recordType={recordFile?.recordType}

currPlayTime={currPlayTime}

onChangePlayerStatus={status => {

setPlayerStatus(status);

}}

innerAudioContextRef={innerAudioContextRef}

isEditMode={isEditMode}

onUpdateSttData={handleUpdateSttData}

/>

)}

{currTab === 'summary' && (

<SummaryContent summary={summary} transferStatus={transferStatus} />

)}

{currTab === 'mindMap' && (

<MindMapContent summary={summary} transferStatus={transferStatus} />

)}

{(transferStatus === TRANSFER_STATUS.Initial ||

transferStatus === TRANSFER_STATUS.Failed) &&

!(currTab === 'stt' && recordFile?.transferType === TransferType.REALTIME) && (

<>

<EmptyContent type={EMPTY_TYPE.NO_TRANSCRIPTION} />

<Button

className={styles.generateButton}

onClick={() => {

// Select a template

setShowTemplatePopup(true);

}}

>

<Text className={styles.generateText}>{Strings.getLang('generate')}</Text>

</Button>

</>

)}

</View>

// Start transcription and summary

const handleStartTransfer = async (selectTemplate: TRANSFER_TEMPLATE) => {

if (isLoading.current) return;

try {

isLoading.current = true;

ty.showLoading({ title: '' });

await tttTransfer({

recordTransferId: currRecordTransferId.current,

template: selectTemplate,

language: recordFile?.originalLanguage || language,

});

setTransferStatus(TRANSFER_STATUS.Processing);

const fileDetail: any = await tttGetFilesDetail({

recordTransferId: currRecordTransferId.current,

amplitudeMaxCount: 100,

});

setRecordFile(fileDetail);

dispatch(updateRecordTransferResultList());

ty.hideLoading();

isLoading.current = false;

} catch (error) {

console.log(error);

dispatch(updateRecordTransferResultList());

ty.hideLoading();

isLoading.current = false;

}

};

// Get transcription and summary details

const getFileDetail = async () => {

// Hide the loading indicator and reset the loading flag

const finishLoading = () => {

ty.hideLoading();

isLoading.current = false;

};

try {

isLoading.current = true;

ty.showLoading({ title: '' });

const recordTransferId = currRecordTransferId.current;

// Get the recording details

const fileDetail = await tttGetFilesDetail({

recordTransferId,

amplitudeMaxCount: 100,

});

if (fileDetail) {

setRecordFile(fileDetail);

const { storageKey, transfer, visit, status, recordId, transferType } = fileDetail;

if (!visit) {

updateFileVisitStatus();

}

setTransferStatus(transfer);

// Get real-time transcription data directly from the app interface

if (transferType === TransferType.REALTIME) {

// Get the transcription data

const realTimeResult: any = await tttGetRecordTransferRealTimeResult({

recordId,

});

const { list } = realTimeResult;

const newData = list

.filter(item => !!item?.asr && item?.asr !== 'null')

.map(item => ({

asrId: item.asrId,

startSecond: Math.floor(item.beginOffset / 1000),

endSecond: Math.floor(item.endOffset / 1000),

text: item.asr,

transText: item.translate,

channel: item.channel,

}));

setSttData(newData);

originSttData.current = newData;

// Get local summary data on the client

tttGetRecordTransferSummaryResult({

recordTransferId,

from: 0,

}).then((d: any) => {

if (d?.text) {

resolveSummaryText(d?.text);

}

});

// Get summary data on the cloud

tttGetRecordTransferSummaryResult({

recordTransferId,

from: 1, // The cloud

}).then((d: any) => {

if (d?.text) {

tttSaveRecordTransferSummaryResult({ recordTransferId, text: d?.text });

resolveSummaryText(d?.text);

}

});

} else {

// status indicates the file synchronization status. 0: not uploaded, 1: uploading, 2: successfully uploaded, 3: upload failed

// transfer indicates the transcription status. 0: not transcribed, 1: transcribing, 2: successfully transcribed, 3: transcription failed

if (status === 2 && transfer === 2) {

// Get local transcription data on the client

tttGetRecordTransferRecognizeResult({

recordTransferId,

from: 0, // Local

}).then((d: any) => {

if (d?.text) {

resolveSttText(d?.text);

}

});

// Get transcription data on the cloud

tttGetRecordTransferRecognizeResult({

recordTransferId,

from: 1, // The cloud

}).then((d: any) => {

if (d?.text) {

// Cache locally on the client

tttSaveRecordTransferRecognizeResult({ recordTransferId, text: d?.text });

resolveSttText(d?.text);

}

});

// Get local summary data on the client

tttGetRecordTransferSummaryResult({

recordTransferId,

from: 0,

}).then((d: any) => {

if (d?.text) {

resolveSummaryText(d?.text);

}

});

// Get summary data on the cloud

tttGetRecordTransferSummaryResult({

recordTransferId,

from: 1, // The cloud

}).then((d: any) => {

if (d?.text) {

tttSaveRecordTransferSummaryResult({ recordTransferId, text: d?.text });

resolveSummaryText(d?.text);

}

});

}

}

finishLoading();

}

} catch (error) {

console.log('error', error);

finishLoading();

}

};
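For real-time recordings, the code above filters out empty ASR entries and converts offsets to whole seconds before storing them. The sketch below restates that mapping as a standalone function. `toSttSegments` and the two interfaces are hypothetical, the field types are assumptions, and the offsets are treated as milliseconds because the code above divides them by 1000.

```typescript
// Sketch only: toSttSegments is a hypothetical helper; the field names follow
// the real-time result handling shown above, and the field types are assumed.

interface RealTimeItem {
  asrId: string | number;
  asr: string | null;
  translate: string | null;
  beginOffset: number; // assumed to be milliseconds
  endOffset: number; // assumed to be milliseconds
  channel: number;
}

interface SttSegment {
  asrId: string | number;
  startSecond: number;
  endSecond: number;
  text: string;
  transText: string | null;
  channel: number;
}

function toSttSegments(items: RealTimeItem[] = []): SttSegment[] {
  const segments: SttSegment[] = [];
  for (const item of items) {
    // Skip entries without recognized text, including the literal string "null".
    if (!item.asr || item.asr === 'null') continue;
    segments.push({
      asrId: item.asrId,
      startSecond: Math.floor(item.beginOffset / 1000),
      endSecond: Math.floor(item.endOffset / 1000),
      text: item.asr,
      transText: item.translate,
      channel: item.channel,
    });
  }
  return segments;
}

const segments = toSttSegments([
  { asrId: 1, asr: 'Hello', translate: 'Bonjour', beginOffset: 1200, endOffset: 2900, channel: 0 },
  { asrId: 2, asr: 'null', translate: null, beginOffset: 3000, endOffset: 3500, channel: 0 },
]);
console.log(segments.length); // 1
```

Defaulting `items` to an empty array also covers the case where the result carries no list.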

- Congrats! 🎉 You have finished this guide.

- If you run into problems during development, contact Tuya's Smart MiniApp team for troubleshooting.