Prerequisites

- Read the Ray Novice Village Tasks to learn the basics of the Ray framework.

- Read Develop Universal Panel Based on Ray to learn the basics of Ray panel development.

- The AI Video Highlight Template is developed with the Smart Device Model (SDM). For more information, see the documentation of the Smart Device Model.

Development environment

For more information, see Panel MiniApp > Set up environment.

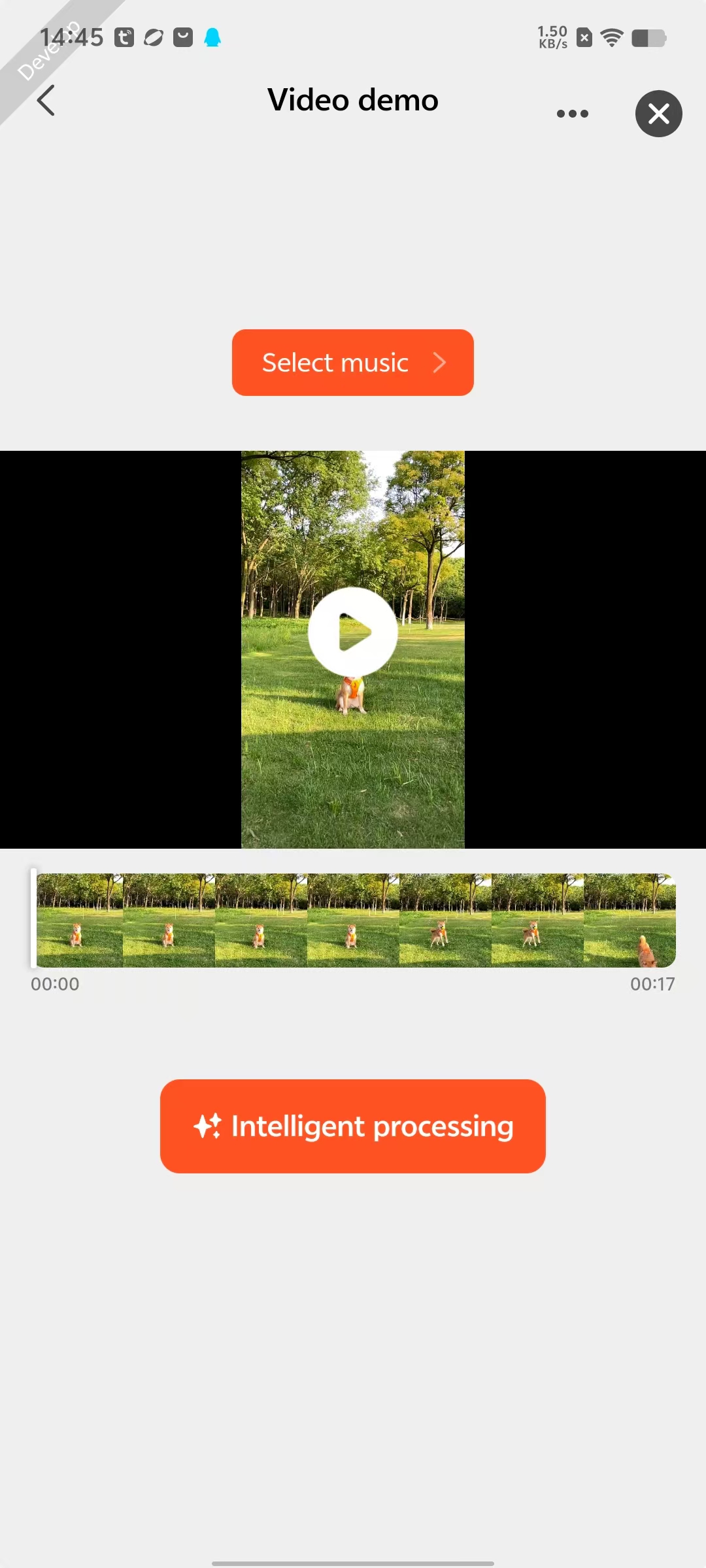

In complex video scenes, leverage AI technology to identify and highlight key subjects (such as pets and portraits) to enhance visual focus.

Features

- Video interaction features:

- Import video

- Export video

- Render the first frame

- Play/pause video

- Show a progress bar

- Audio interaction features:

- Get the default background music

- Show a list of default background music

- Preview the default background music

- AI video stream processing features:

- Highlight pets (supports more than 80 kinds of animals, including cats, dogs, and birds)

- Highlight humans

- Add background music to video

- Adjust the weight of the audio mix (video soundtrack and background music)

Create panel miniapp on Smart MiniApp Developer Platform

Register and log in to the Smart MiniApp Developer Platform. For more information, see Create panel miniapp.

Create a project based on a template

Open the Tuya MiniApp IDE and create a panel miniapp project based on the AI video highlight template.

For more information, see Initialize project.

The panel miniapp project is now initialized. The project directory structure is as follows:

├── src

│ ├── api // Aggregate file of all cloud API requests of the panel

│ ├── components

│ │ ├── GlobalToast // Global toast

│ │ ├── IconFont // SVG icon container component

│ │ ├── MusicSelectModal // Background music selection dialog

│ │ ├── TopBar // Top bar

│ │ ├── TouchableOpacity // Clickable button component

│ │ ├── Video // Video container component

│ │ ├── VideoSeeker // Video slider component

│ ├── constant

│ │ ├── dpCodes.ts // dpCode constant

│ │ ├── index.ts // Stores all constant configurations

│ ├── devices // Device model

│ ├── hooks // Hooks

│ ├── i18n // Multilingual settings

│ ├── pages

│ │ ├── home // Homepage

│ │ ├── AISkillBtn // AI video processing button component

│ │ ├── MusicSelectBtn // Background music selection button component

│ ├── redux // redux

│ ├── res // Resources, such as pictures and SVG

│ ├── styles // Global style

│ ├── types // Define global types

│ ├── utils // Common utility methods

│ ├── app.config.ts

│ ├── app.less

│ ├── app.tsx

│ ├── composeLayout.tsx // Handle and listen for the adding, unbinding, and DP changes of sub-devices

│ ├── global.config.ts

│ ├── mixins.less // Less mixins

│ ├── routes.config.ts // Configure routing

│ ├── variables.less // Less variables

- Data center: Available in all data centers

- App version: Tuya Smart and Smart Life app v6.5.0 and later

- Kit dependency

- BaseKit: v3.0.6

- MiniKit: v3.0.1

- DeviceKit: v4.0.8

- BizKit: v4.2.0

- AIKit: v1.0.0-objectDetection.11

- baseversion: v2.19.0

- Dependent components

- @ray-js/panel-sdk: "^1.13.1"

- @ray-js/ray: "^1.6.1"

- @ray-js/ray-error-catch: "^0.0.25"

- @ray-js/smart-ui: "^2.1.5"

- @ray-js/cli: "^1.6.1"

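The minimum versions listed above can be checked at runtime before enabling the AI features. The following is a minimal sketch, assuming the current app version is already available as a dotted string; how that string is obtained is outside the scope of this sketch, and `isVersionAtLeast` and `MIN_APP_VERSION` are hypothetical names that are not part of the template.

```typescript
// Hypothetical helper (not part of the template): compares dotted numeric
// versions such as "6.5.0". A leading "v" is ignored and missing parts count as 0.
function isVersionAtLeast(current: string, minimum: string): boolean {
  const parse = (version: string): number[] =>
    version
      .replace(/^v/i, "")
      .split(".")
      .map((part) => Number.parseInt(part, 10) || 0);

  const left = parse(current);
  const right = parse(minimum);
  const length = Math.max(left.length, right.length);

  for (let i = 0; i < length; i += 1) {
    const a = left[i] ?? 0;
    const b = right[i] ?? 0;
    if (a !== b) {
      return a > b;
    }
  }
  // All parts are equal, so the minimum is satisfied.
  return true;
}

// Minimum app version stated in the list above.
const MIN_APP_VERSION = "6.5.0";

// Example: an app at v6.4.9 would not meet the requirement.
const supported = isVersionAtLeast("6.4.9", MIN_APP_VERSION);
```

Comparing numeric parts rather than raw strings avoids the common mistake of ranking "6.10.0" below "6.5.0".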

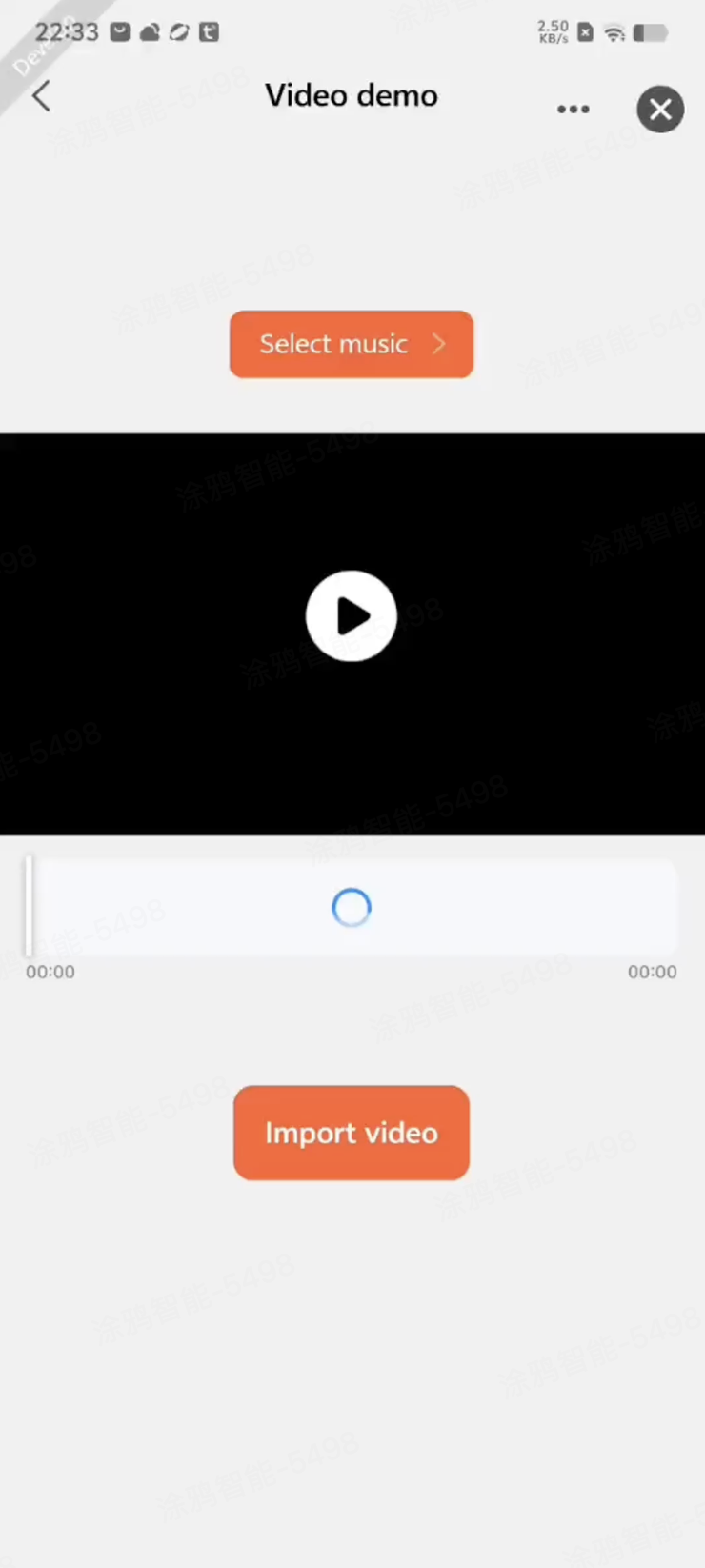

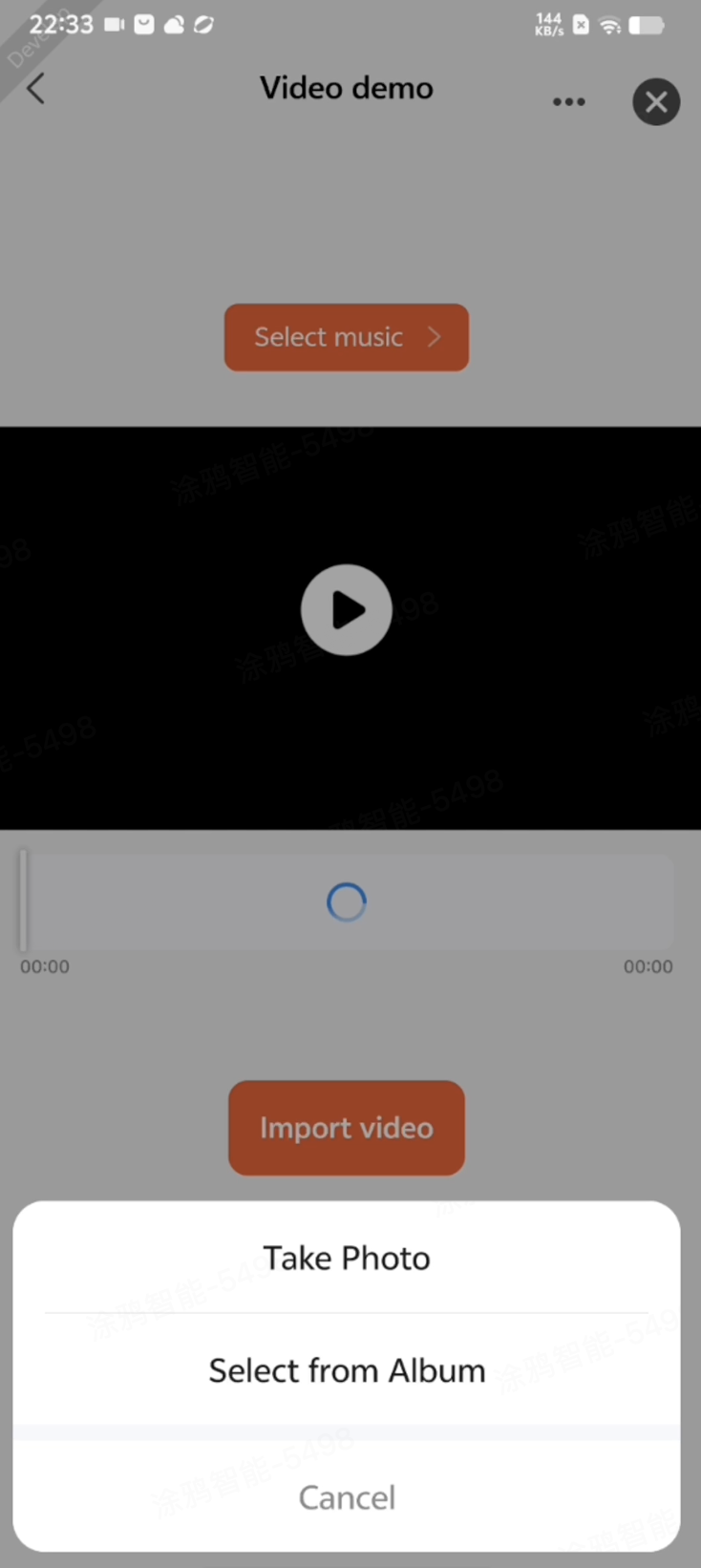

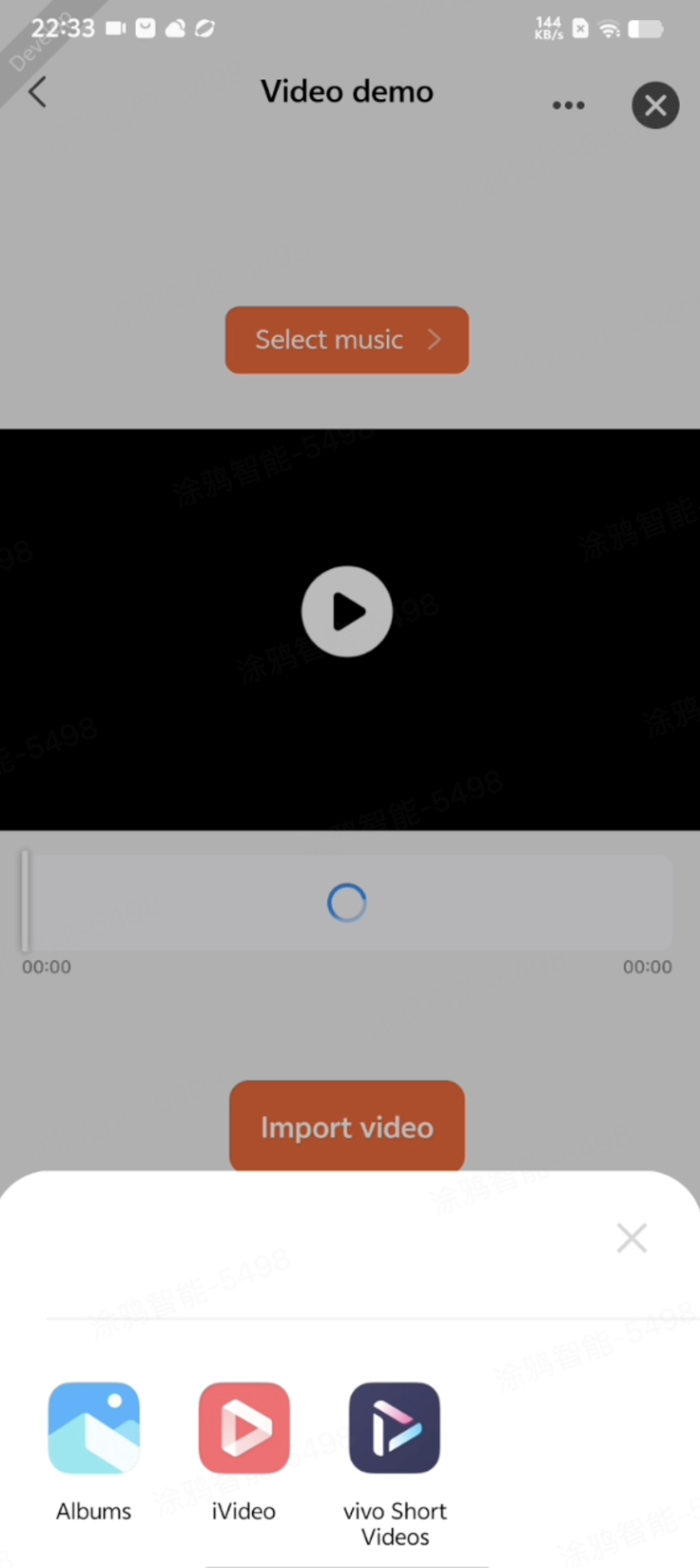

Import and initialize a video

Features

Video resources rely on the following two key capabilities during the initial import phase:

- User material collection

- End users can submit raw video materials by recording in real time or by importing from their mobile photo gallery.

- Video pre-standardization

- Automatically unify the resolution and orientation of source video to prevent format discrepancies from disrupting subsequent AI video generation processes.

Code snippet

import { useState } from "react";

import { chooseMedia, clipVideo } from "@ray-js/ray";

// Due to system compatibility issues, this path is used for video display.

const [videoSrc, setVideoSrc] = useState("");

// Due to system compatibility issues, this path is used for video generation.

const [videoFileSrc, setVideoFileSrc] = useState("");

// Store the rendered image of the first frame of the video.

const [posterSrc, setPosterSrc] = useState("");

// Import the video resources.

const getVideoSourceList = () => {

paseVideo();

chooseMedia({

mediaType: "video",

sourceType: ["album", "camera"],

isFetchVideoFile: true,

success: (res) => {

initState();

const { tempFiles } = res;

console.log("==tempFiles", tempFiles);

clipVideo({

filePath: tempFiles[0].tempFilePath,

startTime: 0,

endTime: tempFiles[0].duration * 1000,

level: 4,

success: ({ videoClipPath }) => {

console.log("====clipVideo==success", videoClipPath);

setVideoSrc(videoClipPath);

setPosterSrc(tempFiles[0].thumbTempFilePath);

setHandleState("inputDone");

},

fail: (error) => {

console.log("====clipVideo==fail", error);

setHandleState("idle");

},

});

},

});

};
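The nested `success`/`fail` callbacks in the snippet above can be flattened by wrapping callback-style calls in Promises. The following is a minimal sketch of that idea, not the template's own code: `promisify`, `CallbackOptions`, and `fakeClipVideo` are hypothetical names, and `fakeClipVideo` is only a stand-in that makes the sketch runnable without the platform APIs.

```typescript
// Option shape shared by the callback-style calls shown above.
interface CallbackOptions<TResult, TError> {
  success?: (result: TResult) => void;
  fail?: (error: TError) => void;
}

// Hypothetical helper: turns a callback-style API into a Promise-returning one.
function promisify<TParams extends object, TResult, TError = unknown>(
  api: (options: TParams & CallbackOptions<TResult, TError>) => void
): (params: TParams) => Promise<TResult> {
  return (params) =>
    new Promise<TResult>((resolve, reject) => {
      api({ ...params, success: resolve, fail: reject });
    });
}

// Stand-in for a platform call (assumption, for illustration only).
function fakeClipVideo(
  options: { filePath: string } & CallbackOptions<
    { videoClipPath: string },
    { errorMsg: string }
  >
): void {
  if (options.filePath) {
    options.success?.({ videoClipPath: `${options.filePath}.clipped` });
  } else {
    options.fail?.({ errorMsg: "empty path" });
  }
}

const clipVideoAsync = promisify<
  { filePath: string },
  { videoClipPath: string },
  { errorMsg: string }
>(fakeClipVideo);

// Usage: the import flow reads top to bottom instead of nesting callbacks.
async function importVideo(filePath: string): Promise<string | null> {
  try {
    const { videoClipPath } = await clipVideoAsync({ filePath });
    return videoClipPath;
  } catch (error) {
    console.log("clip failed", error);
    return null;
  }
}
```

With a wrapper like this, a failed step falls into a single `catch`, which makes it harder to forget a `fail` handler.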

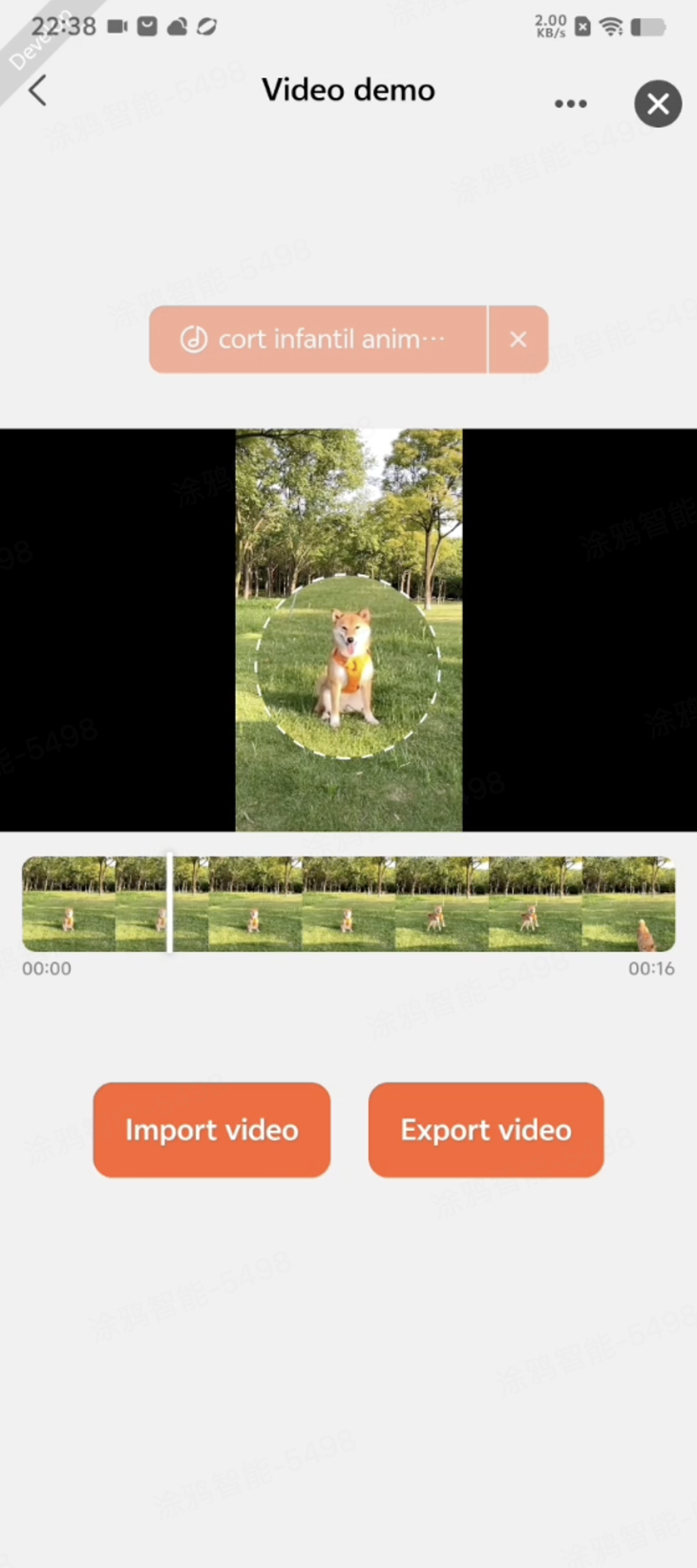

Generate a video with AI and export

Features

After the AI-generated video is ready, end users can save it to their mobile photo gallery.

Code snippet

import { useState } from "react";

import { saveVideoToPhotosAlbum, showToast } from "@ray-js/ray";

// Due to system compatibility issues, this path is used for video generation.

const [videoFileSrc, setVideoFileSrc] = useState("");

// Export the video resources.

const handleOutputVideo = () => {

saveVideoToPhotosAlbum({

filePath: videoFileSrc,

success: (res) => {

// Prompt that the video is exported successfully.

showToast({

title: Strings.getLang("dsc_output_video_success"),

icon: "success",

});

},

fail: (error) => {

console.log("==saveVideoToPhotosAlbum==fail", error);

},

});

};

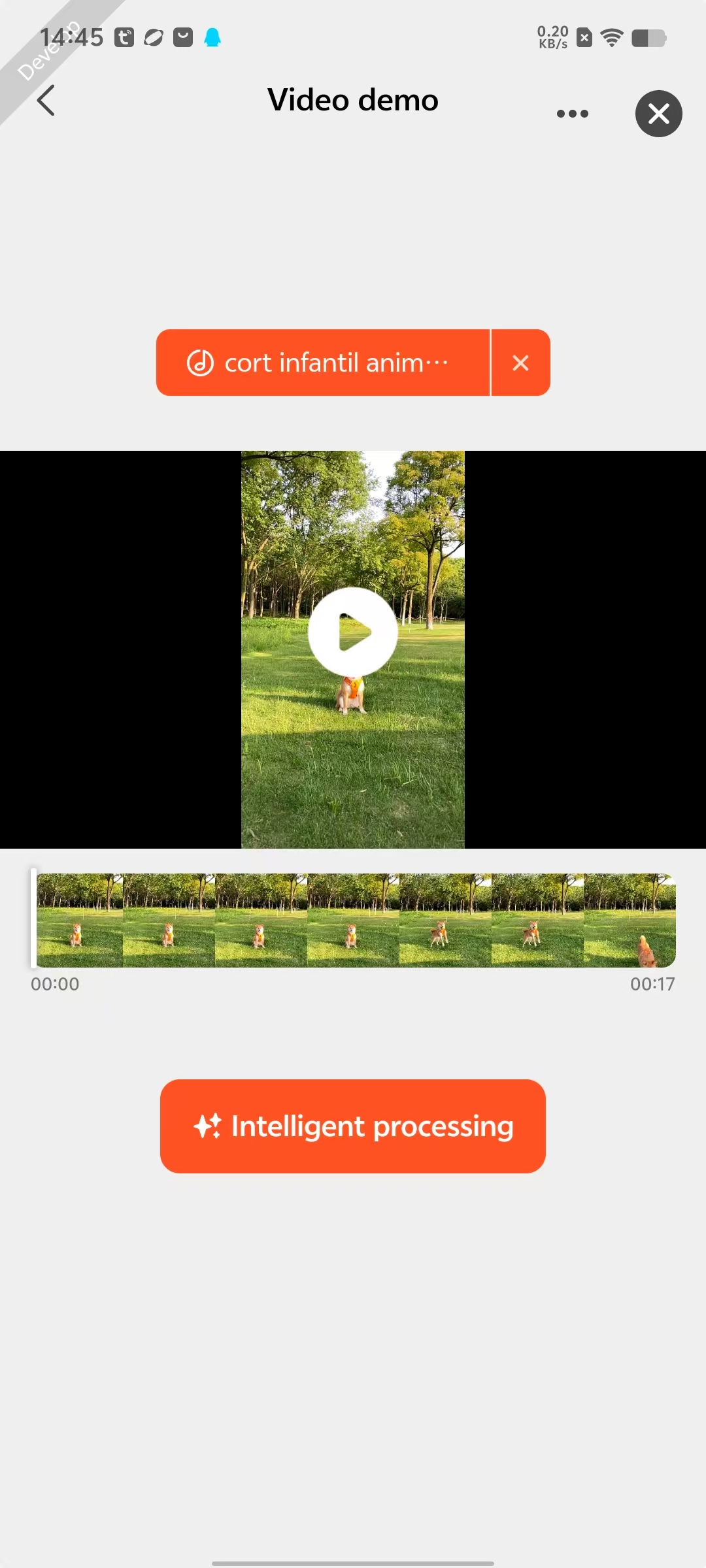

Video display component

Component introduction

The video display component shows both the original video materials and the AI-generated video. End users can play and pause the video at any time. A progress bar is embedded in the component, so end users can drag it to preview the video content.

Code snippet

import { useState } from "react";

// Video is the template's video container component (see components/Video in the project directories).

// Due to system compatibility issues, this path is used for video display.

const [videoSrc, setVideoSrc] = useState("");

// Store the rendered image of the first frame of the video.

const [posterSrc, setPosterSrc] = useState("");

<Video id="video-editor" type="previewer" src={videoSrc} poster={posterSrc} />;
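The progress bar described above needs to map a slider position to a playback time and show that time to the user. The following is a minimal sketch of those two calculations, assuming the slider reports a percentage from 0 to 100 and the video duration is known in seconds. `progressToSeconds` and `formatTime` are hypothetical helpers and are not taken from the template's VideoSeeker component.

```typescript
// Hypothetical helper: converts a slider percentage (0-100) to a time in seconds.
// Out-of-range input is clamped so the seek target always stays inside the video.
function progressToSeconds(percent: number, durationSeconds: number): number {
  const clamped = Math.min(100, Math.max(0, percent));
  return (clamped / 100) * durationSeconds;
}

// Hypothetical helper: formats seconds as "mm:ss" for the progress label.
function formatTime(totalSeconds: number): string {
  const whole = Math.max(0, Math.floor(totalSeconds));
  const pad = (value: number): string => (value < 10 ? `0${value}` : String(value));
  return `${pad(Math.floor(whole / 60))}:${pad(whole % 60)}`;
}

// Example: the middle of a 90-second video.
const seekTarget = progressToSeconds(50, 90);
const label = formatTime(seekTarget);
```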

Get the default background music

Features

Currently, this template provides a selection of default background music for you to access and use, with plans to extend the music library in the future. Accessing the default background music involves the following two steps:

- Get the online URL of the default background music.

- Download the online background music to a mobile phone's local storage.

Code snippet

import { backgroundMusicList, backgroundMusicDownload } from "@ray-js/ray";

const createLocalMusicPath = (idx: number) => {

const fileRoot = ty.env.USER_DATA_PATH;

const filePath = `${fileRoot}/music${idx + 1}.mp4`;

return filePath;

};

// Download all music.

const downloadMusic = (musicArray: Array<ItemMusic>) => {

// Use map to iterate over each music item and wrap the callback-based download call in a Promise.

const downloadPromises = musicArray.map((music, index) => {

const localPath = createLocalMusicPath(index);

return new Promise((resolve, reject) => {

try {

backgroundMusicDownload({

musicUrl: music.musicUrl,

musicPath: localPath,

success: (res) => {

console.log("==backgroundMusicDownload==success", res);

resolve({

id: music.musicTitle,

...music,

musicLocalPath: localPath,

});

},

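fail: ({ errorMsg }) => {

console.log("==backgroundMusicDownload==fail", errorMsg);

// Reject so that Promise.all settles instead of waiting forever on a failed download.

reject(new Error(errorMsg));

},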

});

} catch (error) {

reject(error);

}

});

});

return Promise.all(downloadPromises)

.then((localMusicArray) => {

console.log("Download is completed:", localMusicArray);

return localMusicArray;

})

.catch((error) => {

console.error("Error occurred during download:", error);

// Rethrow so that the caller's own catch handler runs.

throw error;

});

};

// Initialize the list of local music resources.

const initLocalMusicSource = () => {

backgroundMusicList({

success: ({ musicList }) => {

console.log("===backgroundMusicList==", musicList);

downloadMusic(musicList)

.then((resList) => {

console.log("===downloadMusic==success", resList);

// Cache background music data in panel redux.

dispatch(updateMusicInfoList(resList));

})

.catch((error) => {

console.log("===downloadMusic==fail", error);

});

},

fail: ({ errorMsg }) => {

console.log("====backgroundMusicList==fail", errorMsg);

},

});

};

// Complete background music initialization when the panel is initialized.

initLocalMusicSource();
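Because `Promise.all` rejects as soon as one download fails, a single unavailable track would leave the music list empty. One alternative is to keep the tracks that did download. The following is a minimal sketch of that variant, not the template's implementation: `MusicItem`, `LocalMusicItem`, `DownloadOne`, and `downloadAvailableMusic` are hypothetical names, and the download function is passed in so the sketch runs without the platform API.

```typescript
// Field names follow the snippet above; the type names are hypothetical.
interface MusicItem {
  musicTitle: string;
  musicUrl: string;
}

interface LocalMusicItem extends MusicItem {
  musicLocalPath: string;
}

// Assumed shape of a Promise-based download call.
type DownloadOne = (music: MusicItem, localPath: string) => Promise<void>;

// Downloads every track and returns only the ones that succeeded.
async function downloadAvailableMusic(
  list: MusicItem[],
  downloadOne: DownloadOne,
  root: string
): Promise<LocalMusicItem[]> {
  const attempts = list.map(
    async (music, index): Promise<LocalMusicItem | null> => {
      const musicLocalPath = `${root}/music${index + 1}.mp4`;
      try {
        await downloadOne(music, musicLocalPath);
        return { ...music, musicLocalPath };
      } catch (error) {
        // A failed track is skipped rather than failing the whole batch.
        console.log("download failed", music.musicTitle, error);
        return null;
      }
    }
  );
  const results = await Promise.all(attempts);
  return results.filter((item): item is LocalMusicItem => item !== null);
}
```

Whether a partial list is acceptable depends on the product requirement. If every track must be present, the all-or-nothing behavior of `Promise.all` is the better fit.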

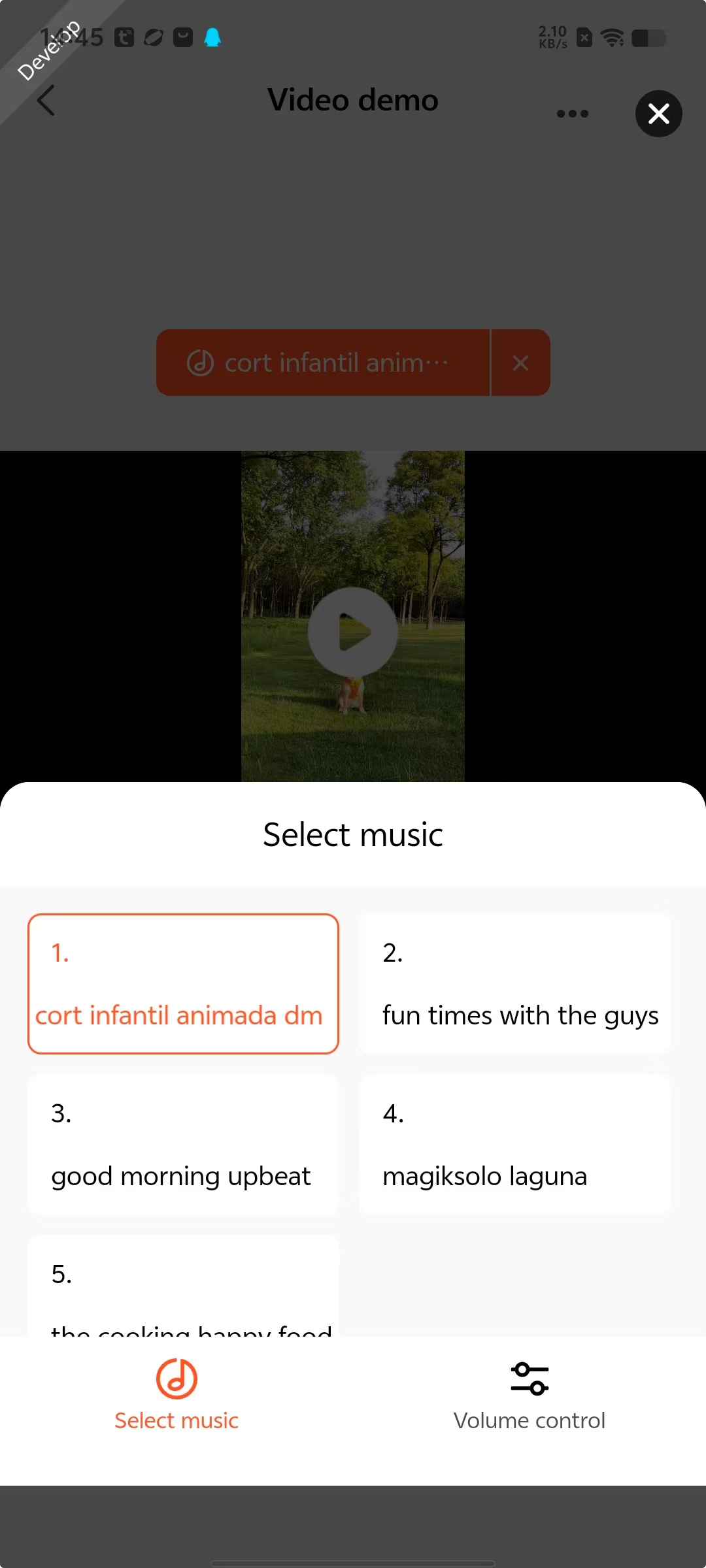

Display and preview default background music

Features

The template provides the LocalMusicList component for displaying and previewing background music. The music preview capability is primarily implemented through an InnerAudioContext instance.

Code snippet

import { createInnerAudioContext } from "@ray-js/ray";

// Initialize the audioManager instance.

const audioManager = createInnerAudioContext({

success: (res) => {

console.log("==createInnerAudioContext==success", res);

},

fail: (error) => {

console.log("==createInnerAudioContext==fail", error);

},

});

// Play local music through local path.

audioManager.play({

src: currentMusic.musicLocalPath,

success: (res) => {

console.log("==play==success", res);

},

fail: (error) => {

console.log("==play==fail", error);

},

});

// Pause the music playback.

audioManager.pause({

success: (res) => {

console.log("==pause==success", res);

},

fail: (error) => {

console.log("==pause==fail", error);

},

});

// Destroy the audio management instance.

audioManager.destroy({

success: (res) => {

console.log("==destroy==success", res);

},

fail: (error) => {

console.log("==destroy==fail", error);

},

});
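In a music list, tapping a track usually either starts its preview or pauses it if it is already playing. The following is a minimal sketch of that decision as a pure function, assuming one preview plays at a time. `PreviewState` and `tapTrack` are hypothetical names, and the returned action would then be passed on to the play and pause calls shown above.

```typescript
// Hypothetical state: the id of the track being previewed, or null if none.
interface PreviewState {
  playingId: string | null;
}

interface TapResult {
  state: PreviewState;
  action: "play" | "pause";
}

// Decides what a tap on a track should do, without touching the audio instance.
function tapTrack(state: PreviewState, trackId: string): TapResult {
  if (state.playingId === trackId) {
    // Tapping the track that is playing pauses it.
    return { state: { playingId: null }, action: "pause" };
  }
  // Tapping any other track switches the preview to it.
  return { state: { playingId: trackId }, action: "play" };
}

// Example: start a preview, then tap the same track again to pause it.
const started = tapTrack({ playingId: null }, "music1");
const paused = tapTrack(started.state, "music1");
```

Keeping this decision separate from the audio instance makes the list behavior easy to test without a device.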

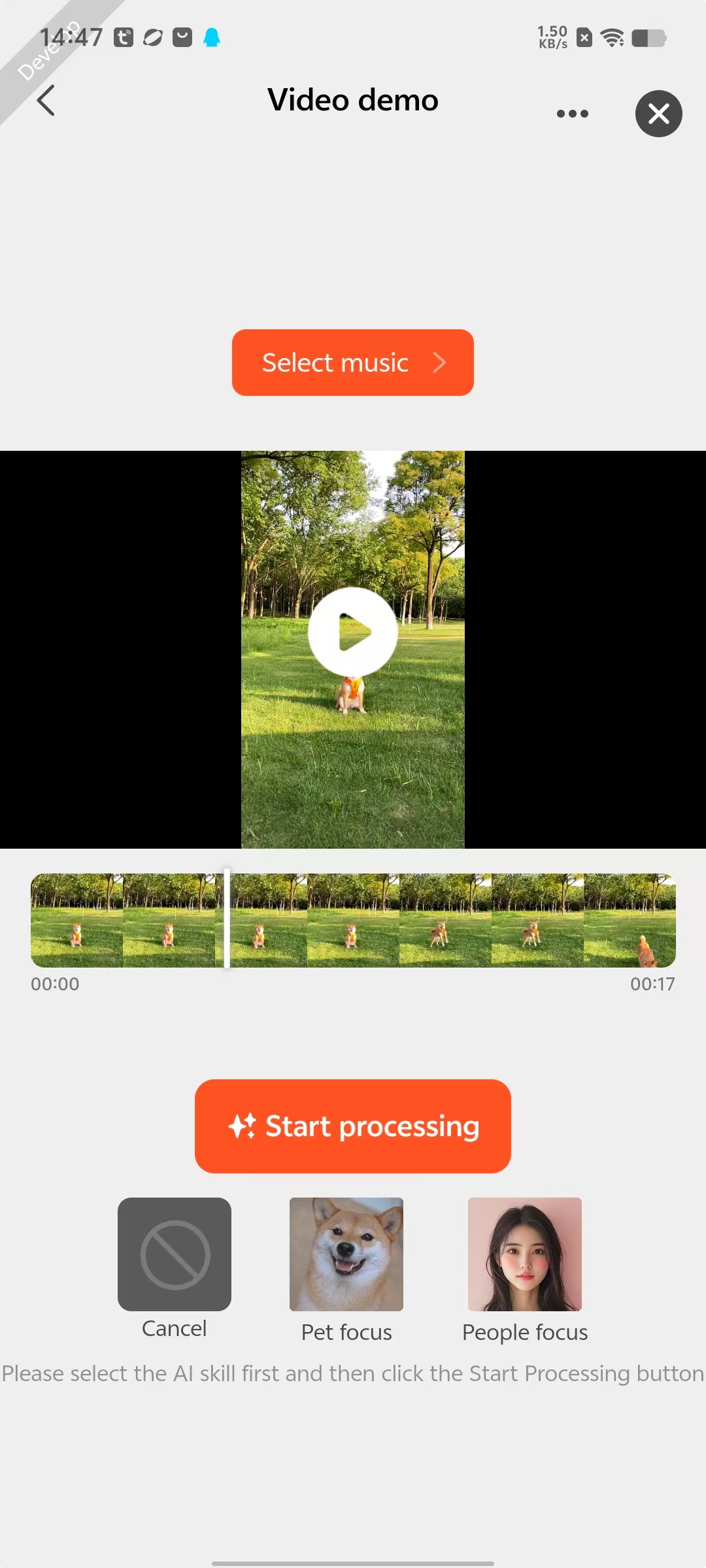

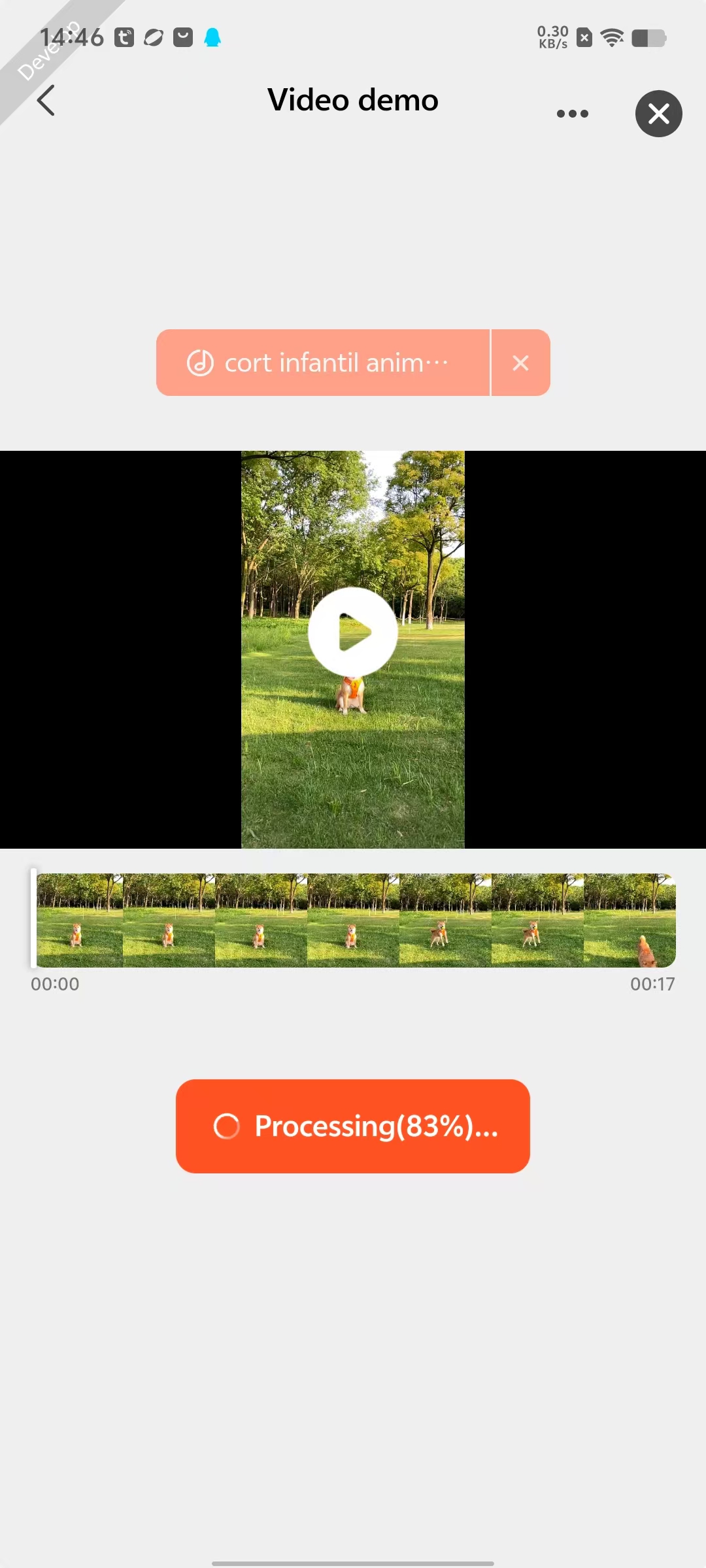

Highlight pets and humans

Features

End users can select a subject category (such as pets or humans) in the template, and the AI automatically processes the video to highlight the chosen subjects.

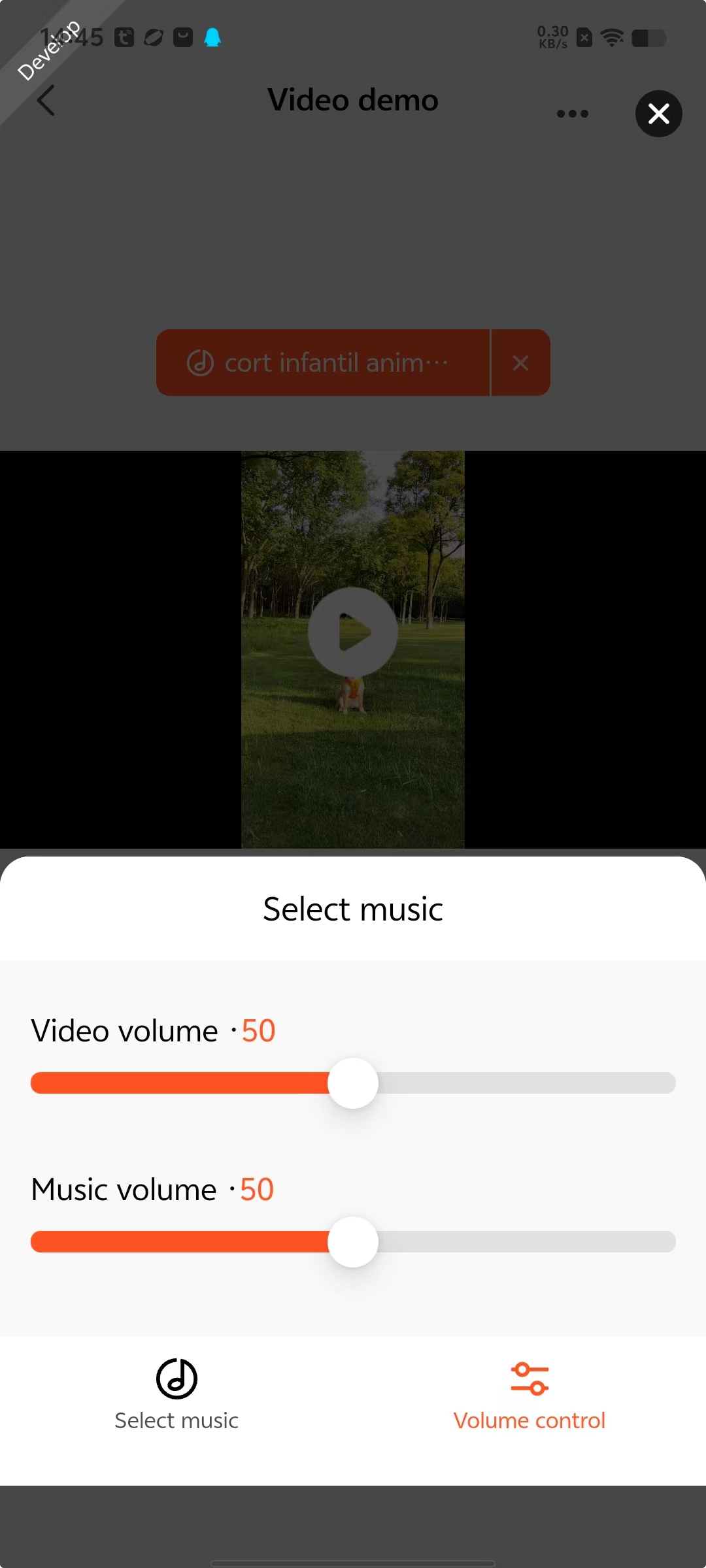

Edit background music in the video

Features

End users can select the background music they want to export with the AI video, and customize the mixing ratio of the original video audio and background music.
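The key API snippet in this section passes the mix as `originAudioVolume` and `overlayAudioVolume`, each computed by dividing a percentage by 100. The following is a minimal sketch of that conversion with clamping added. `VolumePercent`, `MixVolumes`, and `toMixVolumes` are hypothetical names, and the 0 to 100 slider range is an assumption.

```typescript
// Slider values as percentages (assumed range: 0-100).
interface VolumePercent {
  video: number;
  music: number;
}

// Parameter names follow the objectDetectForVideo call in this section.
interface MixVolumes {
  originAudioVolume: number;
  overlayAudioVolume: number;
}

// Hypothetical helper: converts slider percentages to the 0-1 values passed to the API.
function toMixVolumes(volume: VolumePercent): MixVolumes {
  const toUnit = (percent: number): number =>
    Math.min(100, Math.max(0, percent)) / 100;
  return {
    originAudioVolume: toUnit(volume.video),
    overlayAudioVolume: toUnit(volume.music),
  };
}

// Example: keep the original soundtrack dominant.
const mix = toMixVolumes({ video: 80, music: 20 });
```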

Code snippets for key APIs

import { useEffect, useState } from "react";

import { ai } from "@ray-js/ray";

import { createTempVideoRoot } from "@/utils";

const {

objectDetectCreate,

objectDetectDestroy,

objectDetectForVideo,

objectDetectForVideoCancel,

offVideoObjectDetectProgress,

onVideoObjectDetectProgress,

} = ai;

// The editing status of the AI-generated video.

const [handleState, setHandleState] = useState("idle");

// The processing progress of the AI-generated video.

const [progressState, setProgressState] = useState(0);

// Register an app AI instance.

useEffect(() => {

objectDetectCreate();

return () => {

// Destroy the app AI instance when the page is destroyed.

objectDetectDestroy();

audioManager.destroy({

success: (res) => {

console.log("==destroy2==success", res);

},

fail: (error) => {

console.log("==destroy2==fail", error);

},

});

};

}, []);

// AI video stream processing features.

const handleVideoByAI = (

detectType: number,

imageEditType: number,

musicPath = ""

) => {

// It is recommended to turn off music playback when generating AI highlight videos to prevent potential system errors.

paseVideo();

// Generate an export path for AI subject highlight videos.

const tempVideoPath = createTempVideoRoot();

// Register a progress listener for the generation of AI subject highlight videos.

onVideoObjectDetectProgress(handleListenerProgress);

objectDetectForVideo({

inputVideoPath: videoSrc,

outputVideoPath: tempVideoPath,

detectType,

musicPath,

originAudioVolume: volumeObj.video / 100,

overlayAudioVolume: volumeObj.music / 100,

imageEditType,

audioEditType: 2,

success: ({ path }) => {

// Unregister the progress listener for the generation of the AI subject highlight videos.

offVideoObjectDetectProgress(handleListenerProgress);

setProgressState(0);

fetchVideoThumbnails({

filePath: path,

startTime: 0,

endTime: 1,

thumbnailCount: 1,

thumbnailWidth: 375,

thumbnailHeight: 212,

success: (res) => {

setHandleState("success");

setVideoSrc(path);

showToast({

title: Strings.getLang("dsc_ai_generates_success"),

icon: "success",

});

},

fail: ({ errorMsg }) => {

console.log("==fetchVideoThumbnails==fail==", errorMsg);

},

});

},

fail: ({ errorMsg }) => {

console.log("==objectDetectForVideo==fail==", errorMsg);

offVideoObjectDetectProgress(handleListenerProgress);

setProgressState(0);

setHandleState("fail");

setHandleState("selectSkill");

setIsShowAISkills(true);

showToast({

title: Strings.getLang("dsc_ai_generates_fail"),

icon: "error",

});

},

});

};

// Interrupt the generation of AI subject highlight videos.

const handleCancelAIProcess = () => {

objectDetectForVideoCancel({

success: () => {

setHandleState("select");

},

});

};
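The snippets in this guide set `handleState` from several callbacks. Collecting those transitions in one place can make the flow easier to follow. The following is a minimal sketch under stated assumptions: the state names are the string values that appear in the snippets, while the event names, the `nextState` table, and `reduceHandleState` are hypothetical and are not part of the template.

```typescript
// State values as they appear in the snippets of this guide.
type HandleState =
  | "idle"
  | "inputDone"
  | "selectSkill"
  | "select"
  | "success"
  | "fail";

// Hypothetical event names, one per callback that changes the state.
type HandleEvent =
  | "clipSucceeded"
  | "clipFailed"
  | "generateSucceeded"
  | "generateFailed"
  | "generateCancelled";

// Each event leads to the state that the corresponding callback sets last.
const nextState: Record<HandleEvent, HandleState> = {
  clipSucceeded: "inputDone",
  clipFailed: "idle",
  generateSucceeded: "success",
  generateFailed: "selectSkill",
  generateCancelled: "select",
};

// The current state is accepted for a reducer-style signature but is not
// needed, because every event maps to exactly one target state.
function reduceHandleState(_current: HandleState, event: HandleEvent): HandleState {
  return nextState[event];
}

// Example: a successful import followed by a failed generation.
const afterImport = reduceHandleState("idle", "clipSucceeded");
const afterFailure = reduceHandleState(afterImport, "generateFailed");
```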

For an in-depth introduction to the AI technology behind this template, see Video Solution: Video Subject Highlighting Solution.

For details about the APIs, see Developer Documentation: AI Basic Package.

- Congrats! 🎉 You have finished this guide.

- If you run into any problems during development, contact Tuya's Smart MiniApp team for troubleshooting.