Video Detection

Last Updated on: 2023-08-09 09:25:02

Video detection identifies and analyzes objects in a video, such as human shapes, pets, and vehicles, and calculates their coordinates and scores. Results for up to three objects can be reported at the same time.

How it works

Human shape detection and pet detection work in a similar way: captured video frames in YUV or RGB format are sent to the detection module, which analyzes the objects and calculates their coordinates.

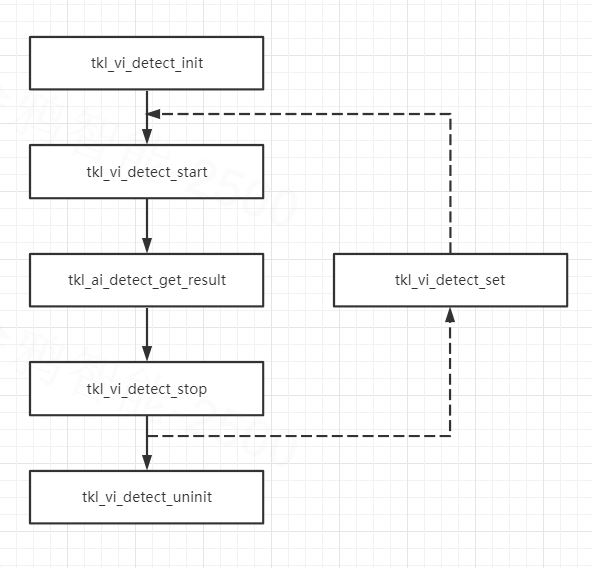

API call process

Data structure

Detection type

Video detection can identify human shapes and pets. The detection types actually supported depend on the chip platform you use.

```c
typedef enum {
    TKL_MEDIA_DETECT_TYPE_HUMAN = 0, // Human shape.
    TKL_MEDIA_DETECT_TYPE_PET,       // Pet, such as a cat or dog.
    TKL_MEDIA_DETECT_TYPE_CAR,       // Vehicle.
    TKL_MEDIA_DETECT_TYPE_FACE,      // Face.
    TKL_MEDIA_DETECT_TYPE_FLAME,     // Flame.
    TKL_MEDIA_DETECT_TYPE_BABY_CRY,  // Baby cry.
    TKL_MEDIA_DETECT_TYPE_DB,        // Decibel.
    TKL_MEDIA_DETECT_TYPE_MOTION,    // Motion.
    TKL_MEDIA_DETECT_TYPE_TRIP,      // Tripwire.
    TKL_MEDIA_DETECT_TYPE_PERI,      // Perimeter.
} TKL_MEDIA_DETECT_TYPE_E;
```

Detection model configuration

On the Fullhan platform (for example, FH8636 and FH8652), you must pass in the detection model through TKL_VI_DETECT_CONFIG_T. Other platforms do not require this.

```c
typedef struct {
    CHAR_T *pmodel;    // The pointer to the detection model.
    INT32_T model_len; // The length of the detection model.
} TKL_VI_DETECT_CONFIG_T;
```

Detection result structs

```c
typedef struct {
    FLOAT_T x;      // The x-coordinate of the rectangle | [0.0 - 1.0]
    FLOAT_T y;      // The y-coordinate of the rectangle | [0.0 - 1.0]
    FLOAT_T width;  // The width of the rectangle | [0.0 - 1.0]
    FLOAT_T height; // The height of the rectangle | [0.0 - 1.0]
} TKL_VI_RECT_T;
```
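Because the rectangle fields are normalized to [0.0, 1.0], they have to be scaled by the frame size before you draw an overlay or crop a region. The sketch below assumes that x and y give the top-left corner of the rectangle, which the page does not state explicitly. PIXEL_RECT_T and rect_to_pixels are hypothetical names, and TKL_VI_RECT_T is redeclared with a plain float (FLOAT_T is assumed to be float) only so the block compiles on its own; drop that redeclaration when you include the SDK header.

```c
/* Stand-in for TKL_VI_RECT_T, redeclared so this sketch compiles on its own.
 * FLOAT_T is assumed to be float. Remove when using the SDK header. */
typedef struct {
    float x;      /* left edge, normalized (top-left origin assumed) */
    float y;      /* top edge, normalized */
    float width;  /* normalized width */
    float height; /* normalized height */
} TKL_VI_RECT_T;

/* Hypothetical pixel-space rectangle (not part of the TKL API). */
typedef struct {
    int x;
    int y;
    int width;
    int height;
} PIXEL_RECT_T;

/* Clamps a normalized value into [0.0, 1.0]. */
static float clamp01(float v)
{
    if (v < 0.0f)
        return 0.0f;
    if (v > 1.0f)
        return 1.0f;
    return v;
}

/* Rounds a non-negative value to the nearest integer. */
static int round_nonneg(float v)
{
    return (int)(v + 0.5f);
}

/* Converts a normalized rectangle to pixel coordinates for a frame of
 * frame_w x frame_h pixels, clipping any part that falls outside the frame. */
static PIXEL_RECT_T rect_to_pixels(const TKL_VI_RECT_T *r, int frame_w, int frame_h)
{
    float x0 = clamp01(r->x);
    float y0 = clamp01(r->y);
    float x1 = clamp01(r->x + r->width);
    float y1 = clamp01(r->y + r->height);
    PIXEL_RECT_T p;

    p.x = round_nonneg(x0 * (float)frame_w);
    p.y = round_nonneg(y0 * (float)frame_h);
    p.width = round_nonneg(x1 * (float)frame_w) - p.x;
    p.height = round_nonneg(y1 * (float)frame_h) - p.y;
    return p;
}
```

For example, a rectangle of {0.25, 0.5, 0.5, 0.25} on a 640 x 480 frame maps to x = 160, y = 240, width = 320, height = 120. Clipping is done on the corners rather than on the width and height, so a box that extends past the right or bottom edge is trimmed to the frame.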

```c
typedef struct {
    INT32_T x;
    INT32_T y;
} TKL_VI_POINT_T;

typedef struct {
    TKL_VI_RECT_T draw_rect;      // Bounding rectangle (coordinate region) of the object.
    FLOAT_T score;                // Score | [0.0 - 1.0]
    TKL_MEDIA_DETECT_TYPE_E type; // The object type, such as human shape, pet, or flame.
} TKL_VI_DETECT_TARGET_T;

typedef struct {
    int value;                   // 0: No motion detected. 1: Motion detected.
    TKL_VI_POINT_T motion_point; // Center point of the detected motion; (0, 0) is the center of the rectangle.
} TKL_VI_MD_RESULT_T;

typedef struct {
    INT32_T count;                                    // Number of valid entries in target[].
    TKL_VI_DETECT_TARGET_T target[TKL_VI_TARGET_MAX]; // Detected objects.
    union {
        TKL_VI_MD_RESULT_T md;                        // Motion detection result.
    };
} TKL_VI_DETECT_RESULT_T;
```
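A common way to consume TKL_VI_DETECT_RESULT_T is to walk the first count targets and keep the best match of one type above a score threshold. The sketch below uses a hypothetical best_target helper. It assumes TKL_VI_TARGET_MAX is 3, based on the "up to three objects" statement at the top of this page, redeclares the structs with standard types so it compiles on its own, stores the type as a plain int, and omits the motion-detection union.

```c
#include <stddef.h>

#ifndef TKL_VI_TARGET_MAX
#define TKL_VI_TARGET_MAX 3 /* assumption: "up to three objects" */
#endif

/* Stand-ins for the SDK structs, redeclared so the sketch is self-contained. */
typedef struct {
    float x, y, width, height;
} TKL_VI_RECT_T;

typedef struct {
    TKL_VI_RECT_T draw_rect;
    float score;
    int type; /* stand-in for TKL_MEDIA_DETECT_TYPE_E */
} TKL_VI_DETECT_TARGET_T;

typedef struct {
    int count;
    TKL_VI_DETECT_TARGET_T target[TKL_VI_TARGET_MAX];
    /* The motion-detection union is omitted from this stand-in. */
} TKL_VI_DETECT_RESULT_T;

/* Hypothetical helper: returns the highest-scoring target of the requested
 * type whose score is at least min_score, or NULL if none qualifies. */
static const TKL_VI_DETECT_TARGET_T *best_target(const TKL_VI_DETECT_RESULT_T *res,
                                                 int type, float min_score)
{
    const TKL_VI_DETECT_TARGET_T *best = NULL;
    int n = res->count;

    /* Never read past the array, even if count is out of range. */
    if (n < 0)
        n = 0;
    if (n > TKL_VI_TARGET_MAX)
        n = TKL_VI_TARGET_MAX;

    for (int i = 0; i < n; i++) {
        const TKL_VI_DETECT_TARGET_T *t = &res->target[i];
        if (t->type != type || t->score < min_score)
            continue;
        if (best == NULL || t->score > best->score)
            best = t;
    }
    return best;
}
```

Clamping count before the loop is a defensive choice: it costs nothing and keeps a bad count from reading past target[].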

Detection parameters

```c
typedef struct {
    UINT32_T roi_count;                             // The number of regions of interest (ROI).
    TKL_VI_RECT_T roi_rect[TKL_VI_MD_ROI_RECT_MAX]; // The ROI rectangles.
    INT32_T track_enable;                           // Enable motion tracking.
} TKL_VI_MD_PARAM_T;
```
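Since each ROI is a TKL_VI_RECT_T with fields in [0.0, 1.0], it can help to validate a region before adding it to TKL_VI_MD_PARAM_T. The sketch below uses a hypothetical md_add_roi helper that rejects regions outside the normalized frame and refuses to overflow roi_rect[]. TKL_VI_MD_ROI_RECT_MAX is given a placeholder value of 4 because the real value is not stated on this page, and the structs are redeclared with standard types only so the block compiles on its own.

```c
#include <stdbool.h>
#include <stdint.h>

#ifndef TKL_VI_MD_ROI_RECT_MAX
#define TKL_VI_MD_ROI_RECT_MAX 4 /* assumption: placeholder, check the SDK header */
#endif

/* Stand-ins for the SDK structs, redeclared so the sketch is self-contained. */
typedef struct {
    float x, y, width, height;
} TKL_VI_RECT_T;

typedef struct {
    uint32_t roi_count;
    TKL_VI_RECT_T roi_rect[TKL_VI_MD_ROI_RECT_MAX];
    int32_t track_enable;
} TKL_VI_MD_PARAM_T;

/* Hypothetical helper: appends roi if it has a positive size, lies inside the
 * normalized frame, and there is room left. Returns false otherwise. */
static bool md_add_roi(TKL_VI_MD_PARAM_T *md, TKL_VI_RECT_T roi)
{
    if (md->roi_count >= TKL_VI_MD_ROI_RECT_MAX)
        return false;
    if (roi.x < 0.0f || roi.y < 0.0f || roi.width <= 0.0f || roi.height <= 0.0f)
        return false;
    if (roi.x + roi.width > 1.0f || roi.y + roi.height > 1.0f)
        return false;
    md->roi_rect[md->roi_count++] = roi;
    return true;
}
```

For instance, {0.0, 0.0, 0.5, 1.0} (the left half of the frame) is accepted, while {0.6, 0.0, 0.5, 1.0} is rejected because it extends past the right edge.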

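```c
typedef struct {
    UINT32_T point_count;                        // The number of points.
    TKL_VI_POINT_T point[TKL_VI_PERI_POINT_MAX]; // The points that define the perimeter.
} TKL_VI_PERI_PARAM_T;

typedef struct {
    INT32_T sensitivity;          // Detection sensitivity.
    union {
        TKL_VI_MD_PARAM_T md;     // Motion detection parameters.
        TKL_VI_PERI_PARAM_T peri; // Perimeter detection parameters.
    };
} TKL_VI_DETECT_PARAM_T;
```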
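TKL_VI_DETECT_PARAM_T holds a shared sensitivity value plus a union, so only one of md or peri is meaningful at a time. Judging by the member names, md presumably goes with TKL_MEDIA_DETECT_TYPE_MOTION and peri with TKL_MEDIA_DETECT_TYPE_PERI, but the page does not state this. The sketch below fills the structure for a rectangular perimeter with a hypothetical peri_param_rect helper. The structs are redeclared with standard types so it compiles on its own (the anonymous union needs C11), TKL_VI_PERI_POINT_MAX and TKL_VI_MD_ROI_RECT_MAX get placeholder values, and the coordinates and sensitivity are placeholders because the page gives neither their units nor their range.

```c
#include <stdint.h>
#include <string.h>

#ifndef TKL_VI_PERI_POINT_MAX
#define TKL_VI_PERI_POINT_MAX 8 /* assumption: placeholder, check the SDK header */
#endif
#ifndef TKL_VI_MD_ROI_RECT_MAX
#define TKL_VI_MD_ROI_RECT_MAX 4 /* assumption: placeholder, check the SDK header */
#endif

/* Stand-ins for the SDK structs, redeclared so the sketch is self-contained. */
typedef struct { float x, y, width, height; } TKL_VI_RECT_T;
typedef struct { int32_t x, y; } TKL_VI_POINT_T;

typedef struct {
    uint32_t roi_count;
    TKL_VI_RECT_T roi_rect[TKL_VI_MD_ROI_RECT_MAX];
    int32_t track_enable;
} TKL_VI_MD_PARAM_T;

typedef struct {
    uint32_t point_count;
    TKL_VI_POINT_T point[TKL_VI_PERI_POINT_MAX];
} TKL_VI_PERI_PARAM_T;

typedef struct {
    int32_t sensitivity;
    union {                       /* anonymous union, as in the SDK struct */
        TKL_VI_MD_PARAM_T md;
        TKL_VI_PERI_PARAM_T peri;
    };
} TKL_VI_DETECT_PARAM_T;

/* Hypothetical helper: fills param with an axis-aligned rectangular perimeter
 * whose corners are listed in order around the rectangle. */
static void peri_param_rect(TKL_VI_DETECT_PARAM_T *param, int32_t sensitivity,
                            int32_t left, int32_t top, int32_t right, int32_t bottom)
{
    memset(param, 0, sizeof(*param)); /* clear every union member first */
    param->sensitivity = sensitivity;
    param->peri.point_count = 4;
    param->peri.point[0] = (TKL_VI_POINT_T){left, top};
    param->peri.point[1] = (TKL_VI_POINT_T){right, top};
    param->peri.point[2] = (TKL_VI_POINT_T){right, bottom};
    param->peri.point[3] = (TKL_VI_POINT_T){left, bottom};
}
```

In real code, the filled structure would then be passed to tkl_vi_detect_set along with the matching detection type.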

API description

Initialize detection

An IPC has only one sensor and supports a single channel, so set chn to 0.

```c
/**
 * @brief Initialize detection.
 *
 * @param[in] chn: video input (VI) channel
 * @param[in] type: detection type
 * @param[in] p_config: detection config (carries the model on Fullhan platforms)
 *
 * @return OPRT_OK on success. Other values indicate an error; see tkl_error_code.h.
 */
OPERATE_RET tkl_vi_detect_init(TKL_VI_CHN_E chn, TKL_MEDIA_DETECT_TYPE_E type, TKL_VI_DETECT_CONFIG_T *p_config);
```

Start detection

```c
/**
 * @brief Start detection.
 *
 * @param[in] chn: video input (VI) channel
 * @param[in] type: detection type
 *
 * @return OPRT_OK on success. Other values indicate an error; see tkl_error_code.h.
 */
OPERATE_RET tkl_vi_detect_start(TKL_VI_CHN_E chn, TKL_MEDIA_DETECT_TYPE_E type);
```

Get the detection result

```c
/**
 * @brief Get detection results.
 *
 * @param[in] chn: video input (VI) channel
 * @param[in] type: detection type
 * @param[out] presult: detection results
 *
 * @return OPRT_OK on success. Other values indicate an error; see tkl_error_code.h.
 */
OPERATE_RET tkl_vi_detect_get_result(TKL_VI_CHN_E chn, TKL_MEDIA_DETECT_TYPE_E type, TKL_VI_DETECT_RESULT_T *presult);
```

Set detection parameters

```c
/**
 * @brief Set detection parameters.
 *
 * @param[in] chn: video input (VI) channel
 * @param[in] type: detection type
 * @param[in] pparam: detection parameters
 *
 * @return OPRT_OK on success. Other values indicate an error; see tkl_error_code.h.
 */
OPERATE_RET tkl_vi_detect_set(TKL_VI_CHN_E chn, TKL_MEDIA_DETECT_TYPE_E type, TKL_VI_DETECT_PARAM_T *pparam);
```

Deinitialize detection

```c
/**
 * @brief Deinitialize detection.
 *
 * @param[in] chn: video input (VI) channel
 * @param[in] type: detection type
 *
 * @return OPRT_OK on success. Other values indicate an error; see tkl_error_code.h.
 */
OPERATE_RET tkl_vi_detect_uninit(TKL_VI_CHN_E chn, TKL_MEDIA_DETECT_TYPE_E type);
```

Example

```c
// Human shape detection:
#include <stdio.h>
#include <stdlib.h>

// Reads the whole model file into a heap buffer. The caller must free() it.
static char *load_models(const char *modelPath, int *p_size)
{
    FILE *fp;
    long fileLen;

    if (!(fp = fopen(modelPath, "rb"))) {
        printf("Error: open %s failed\n", modelPath);
        return NULL;
    }

    // Determine the file size.
    fseek(fp, 0, SEEK_END);
    fileLen = ftell(fp);
    fseek(fp, 0, SEEK_SET);
    if (fileLen <= 0) {
        printf("Error: invalid model file size %ld\n", fileLen);
        fclose(fp);
        return NULL;
    }

    char *buf = malloc((size_t)fileLen);
    if (buf == NULL) {
        printf("Error: malloc model buf failed, size = %ld\n", fileLen);
        fclose(fp);
        return NULL;
    }

    size_t readLen = fread(buf, 1, (size_t)fileLen, fp);
    if (readLen < (size_t)fileLen) {
        printf("Error: read model file failed, fileLen = %ld, readLen = %zu\n", fileLen, readLen);
        free(buf);
        buf = NULL;
    }

    fclose(fp);
    *p_size = (int)readLen;
    return buf;
}

...

const char *modelPath = "/mnt/sdcard/person_pet.nbg";
TKL_VI_DETECT_CONFIG_T model_config = {0};
TKL_VI_DETECT_RESULT_T result = {0};
OPERATE_RET ret;

// Load the model (required on Fullhan platforms such as FH8636 and FH8652).
model_config.pmodel = load_models(modelPath, &model_config.model_len);
if (model_config.pmodel == NULL) {
    printf("load_models failed!\n");
    return;
}

ret = tkl_vi_detect_init(0, TKL_MEDIA_DETECT_TYPE_HUMAN, &model_config);
...
ret = tkl_vi_detect_start(0, TKL_MEDIA_DETECT_TYPE_HUMAN);
...
ret = tkl_vi_detect_get_result(0, TKL_MEDIA_DETECT_TYPE_HUMAN, &result);
...
ret = tkl_vi_detect_stop(0, TKL_MEDIA_DETECT_TYPE_HUMAN);
...
// Release detection resources before freeing the model buffer.
ret = tkl_vi_detect_uninit(0, TKL_MEDIA_DETECT_TYPE_HUMAN);
free(model_config.pmodel);

// Pet detection follows the same API call process: pass TKL_MEDIA_DETECT_TYPE_PET
// instead of TKL_MEDIA_DETECT_TYPE_HUMAN.
```

Things to note

Detection algorithms can be CPU-intensive. When you do not need detection results, call tkl_vi_detect_stop to stop detection and reduce CPU usage.
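One way to apply this is to run detection only while something consumes the results: start it when the first consumer appears and stop it when the last one goes away. The sketch below is a hypothetical reference-counting gate (detect_gate_acquire, detect_gate_release, and gate_action_t are illustrative names, not TKL APIs). It only tells the caller which action to take, so it stays independent of the SDK, and it is not thread-safe as written.

```c
/* Action the caller should perform after updating the consumer count. */
typedef enum {
    GATE_NONE,  /* nothing to do */
    GATE_START, /* first consumer: start detection */
    GATE_STOP   /* last consumer gone: stop detection */
} gate_action_t;

/* Registers a consumer. Returns GATE_START on the 0 -> 1 transition. */
static gate_action_t detect_gate_acquire(int *users)
{
    return ((*users)++ == 0) ? GATE_START : GATE_NONE;
}

/* Unregisters a consumer. Returns GATE_STOP on the 1 -> 0 transition and
 * ignores unbalanced releases. */
static gate_action_t detect_gate_release(int *users)
{
    if (*users <= 0)
        return GATE_NONE;
    return (--(*users) == 0) ? GATE_STOP : GATE_NONE;
}

/* Usage inside SDK code (not compiled here):
 *   if (detect_gate_acquire(&users) == GATE_START)
 *       ret = tkl_vi_detect_start(0, TKL_MEDIA_DETECT_TYPE_HUMAN);
 *   ...
 *   if (detect_gate_release(&users) == GATE_STOP)
 *       ret = tkl_vi_detect_stop(0, TKL_MEDIA_DETECT_TYPE_HUMAN);
 */
```

If the start call fails, decrement the counter again so that the next consumer retries the start instead of assuming detection is already running.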
